Industrial Embedded Articles

LabVIEW hardware interfacing and integration

With NI hardware or non-NI hardware

LabVIEW is a dominant development platform for all types of automated measurements and control. Developers of automated systems, such as test systems, need to interface with hardware. Examples range from basic digitizing of analog values to controlling a telescope. With the huge array of hardware options, you may be wondering if LabVIEW can interface to absolutely any type of hardware. This article reviews why LabVIEW is such a good development platform for hardware interfacing, based on our decades of using LabVIEW in a wide range of test systems.

In this article we’ll go over:

- Common use cases for interfacing / integrating LabVIEW with hardware

- Tips for interfacing / integrating with hardware

- Lessons learned / gotchas when interfacing / integrating with hardware

- Next steps if you’re looking for help

Common use cases – why do people interface LabVIEW with hardware?

There are two main reasons for using LabVIEW to interface with hardware.

- LabVIEW natively supports so many types of hardware from NI and is designed to support hardware made by other vendors. In fact, most vendors offer LabVIEW drivers with their hardware since LabVIEW is so commonly used.

- Many systems (e.g., automated test, industrial embedded) need to interface with the real world by taking measurements, controlling actuators, or communicating with other hardware.

No single supplier of hardware for measurement and/or control can satisfy all needs. You may have:

- unique data acquisition sample rate needs,

- schedule or budget constraints that require effective implementation,

- uncommon measurement needs,

- or unique sensor types.

LabVIEW offers an open and flexible development platform to handle all these scenarios.

Tips for interfacing LabVIEW with hardware

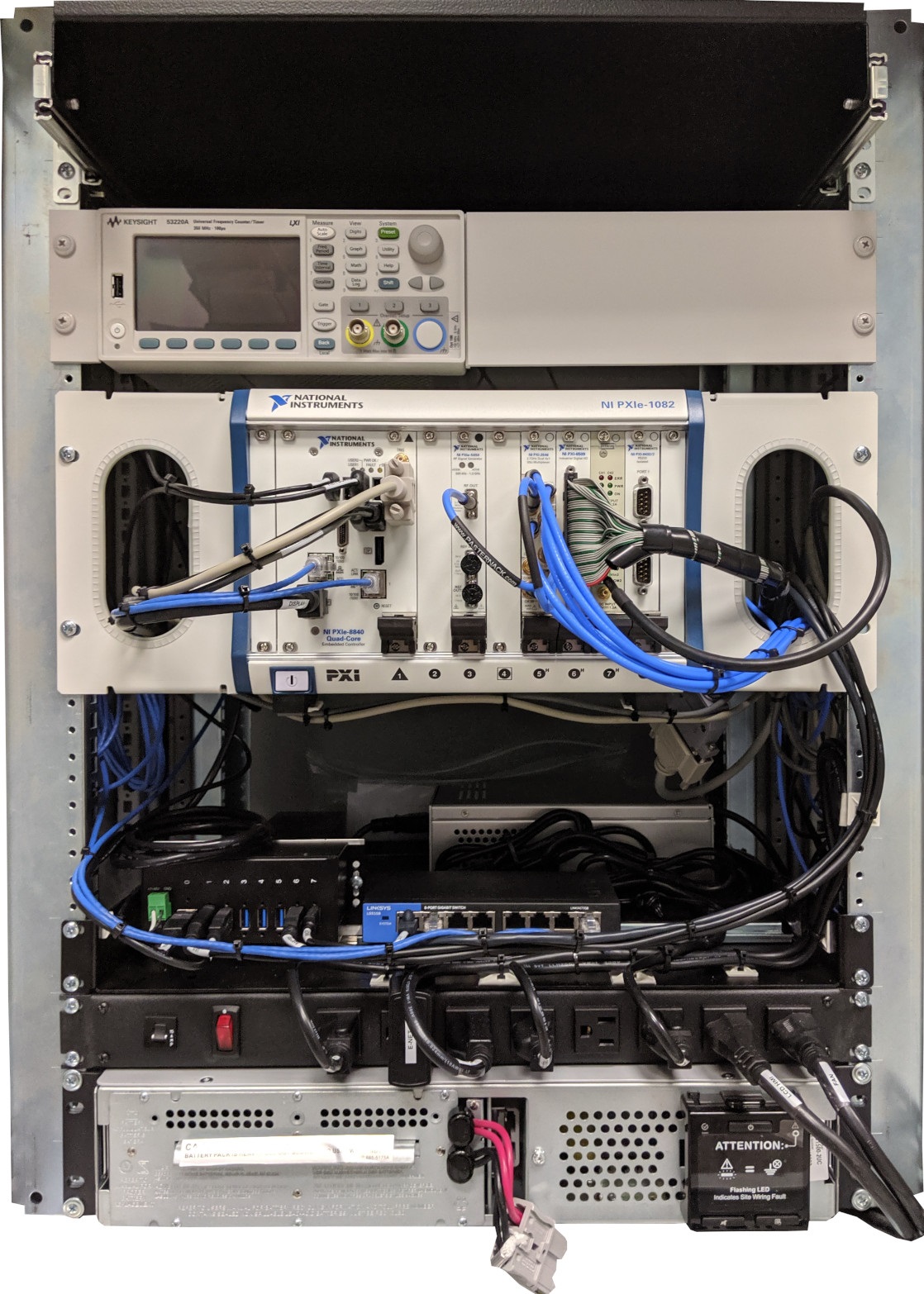

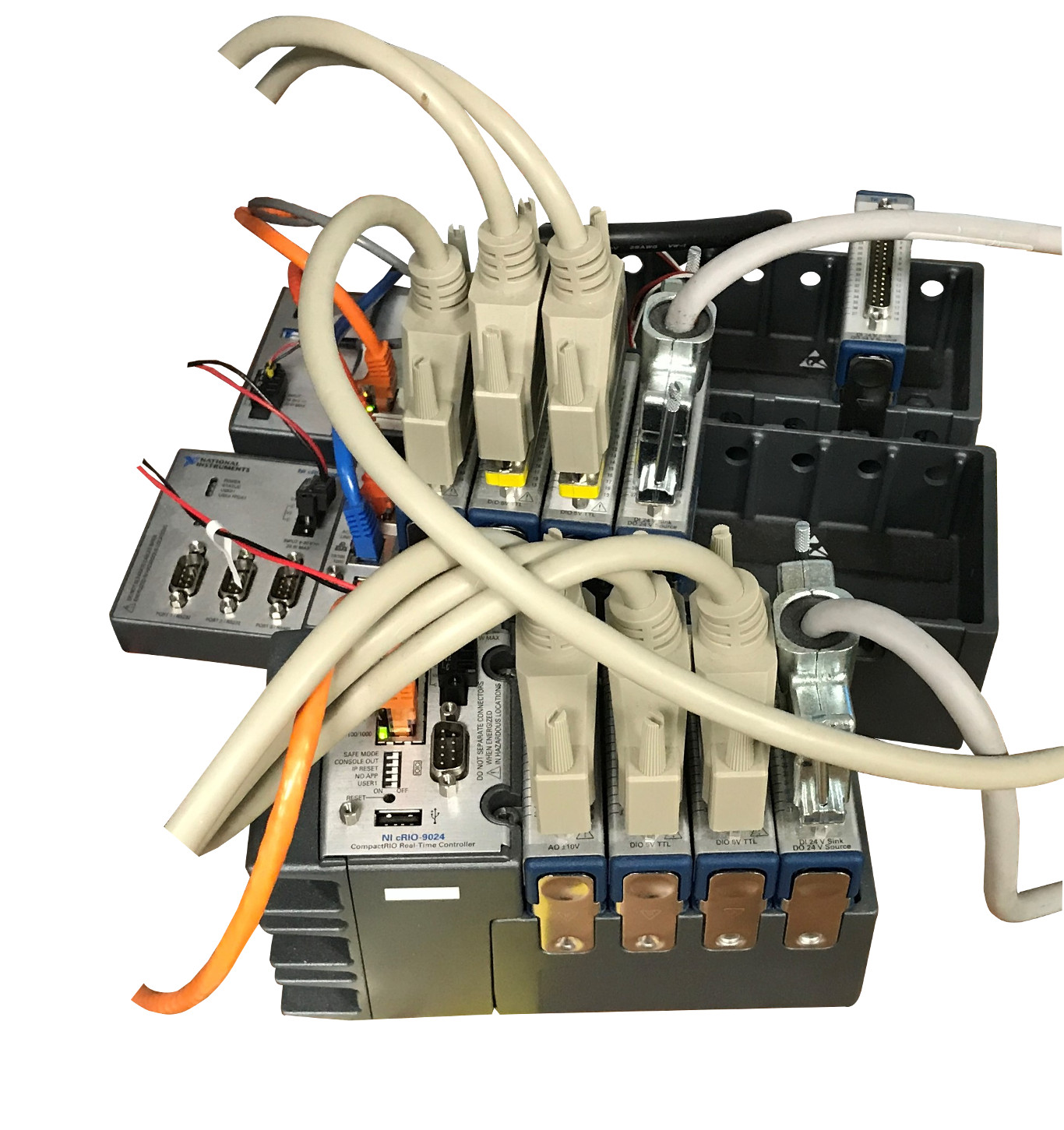

LabVIEW works natively with hardware offered by NI, mainly through the DAQmx drivers or, if you’re using NI’s CompactRIO hardware, through the additional methods of Scan Mode and FPGA nodes. This support is the primary reason that most LabVIEW developers choose NI hardware: it’s just easier to use.

The rest of this section discusses using LabVIEW with non-NI hardware.

LabVIEW drivers

Your first step should be to check whether the hardware vendor offers LabVIEW drivers for the instrument you need.

It’s rare to find a vendor that doesn’t have LabVIEW drivers.

If a driver is available, we recommend that you check that the driver supports the functionality you need in the application you’re developing.

For example, some drivers offered by vendors don’t work well if you have several of the same instrument in one system. Or the driver might be slower to respond than you’d like. In these cases, you might be able to rework the example code offered by the vendor to make it do what you want, or you may have to write your own driver.

Hardware interface connection schemes

Review the connection scheme. Almost all instruments support an interface using messages sent over a communications protocol.

Common protocols (in no particular order) include:

- GPIB: a solid standard still in use, but being replaced with Ethernet

- RS-232 serial: a persistent standard even though it’s low-level and somewhat finicky to set up

- Ethernet: very capable, but connect such instruments only to your PC or controller to avoid IT concerns about hanging an unknown device off the corporate network

- USB: also capable and easy to set up, but may not be as reliable as Ethernet

Note that the VISA standard supports many of these protocols.

If the vendor doesn’t have a LabVIEW driver, you’ll need to learn the instrument’s command interface: the commands sent to the device and the responses it sends back. You may be surprised by the sheer number of messages and the intricacies of their formatting. Drivers for some non-NI instruments are far from trivial; the command reference for a Keysight signal analyzer, for example, runs to 160+ pages.

Best methods to interface LabVIEW with instruments

The best method to consider when interfacing LabVIEW to instruments is VISA, which is a common abstracted bus interface for instruments. VISA supports many bus protocols such as GPIB, USB, and Ethernet communications.

If the instrument does not have a VISA driver for LabVIEW, you can still interface to the device using direct calls for whichever protocol the instrument supports. For example, if the instrument supports Ethernet, you’d open an Ethernet port and read/write messages to the IP address of the device.
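The direct-call approach can be illustrated outside LabVIEW with a few lines of Python. This is only a sketch under stated assumptions: the instrument is assumed to accept newline-terminated ASCII commands over a plain TCP socket and to answer with one newline-terminated line. The function name `query_instrument` is made up for this example.

```python
import socket


def query_instrument(host, port, command, timeout=2.0):
    """Send one newline-terminated command and return the reply line.

    Assumes a line-oriented ASCII protocol; check the instrument manual
    for its actual termination character and port number.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command.encode("ascii") + b"\n")
        reply = bytearray()
        # TCP is a byte stream, so keep reading until the terminator arrives.
        while not reply.endswith(b"\n"):
            chunk = sock.recv(4096)
            if not chunk:  # peer closed the connection before a full line arrived
                break
            reply.extend(chunk)
    return reply.decode("ascii").strip()
```

With a real instrument the call might look like `query_instrument("192.168.1.50", 5025, "*IDN?")`. The address is a placeholder, and port 5025 is only a common choice for raw-socket instrument control, so confirm both against the instrument documentation.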

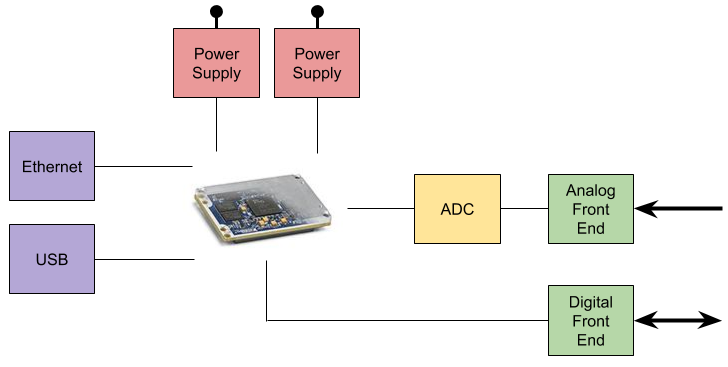

Interfacing LabVIEW with custom hardware

The need to develop a driver for custom hardware is rare and specialized. The effort depends heavily on what the custom hardware does and how communication is handled.

There are 3 approaches worth considering:

- First, the custom hardware might be controlled and monitored via analog or digital signals, which would be handled by an appropriate NI card(s) via the DAQmx driver.

- The next level of complexity would be a device with communication to LabVIEW via RS-232, Ethernet, and so on. Then you could use LabVIEW’s tools (e.g., VISA) to talk with the device.

- Finally, a plug-in PXI card in a chassis with a Windows-based controller. This would require significant software development for the operating system (OS): first, just to get the OS to recognize the card, and second, low-level drivers that follow the requirements of the OS driver model so that LabVIEW could talk to the card.

Alternatives to LabVIEW for hardware interfacing

LabVIEW has a more robust and mature development environment and set of tools for hardware interfacing than other languages, especially for NI hardware.

However, both Python and C# (and by extension C and C++), and other languages too, have ways to interface with just about any type of hardware. For example, NI offers Python wrappers for DAQmx to support their hardware. For non-NI hardware, you may be able to find drivers or wrappers for your preferred development language or you would drop back to using low-level calls or communications protocols and building up your driver from scratch.

Lessons learned & gotchas to look out for when interfacing hardware with LabVIEW

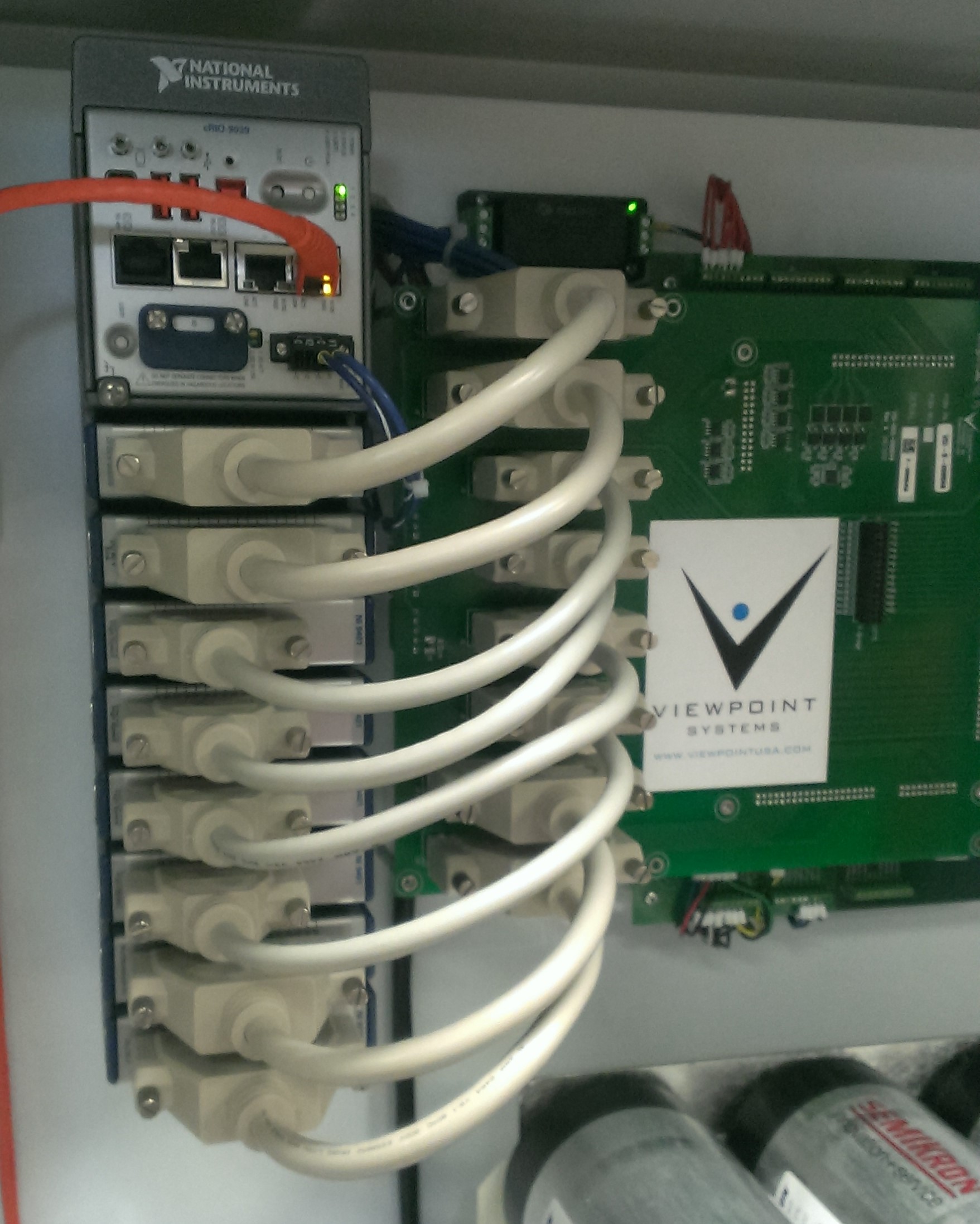

At Viewpoint, we’ve interfaced to countless pieces of hardware over decades, most from NI, but many times from other vendors due to the wide range of test systems we’ve developed and delivered to customers with incredibly diverse measurement and control needs.

Some of the more impactful gotchas to keep in mind when interfacing LabVIEW with hardware are:

- Using the hardware in ways that are incompatible with the desired measurements or control.

- Using hardware outside of its specs.

- Underestimating the development time.

- Forgetting to save and restore the instrument configuration.

Some details regarding each of these:

Using the hardware in ways that are incompatible with the desired measurements or control

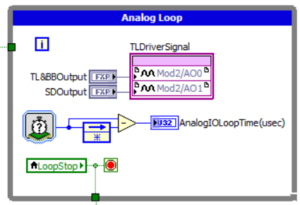

- Doing single point updates/reads when buffered I/O is needed to keep up with the desired rate.

- Incorrect configuration of synchronization across I/O channels.

- Acquiring/outputting more data than can be handled by the PC/controller.

- An example of a nitpicky but head-scratching detail is mixing delta-sigma (DS) and successive-approximation (SA) A/D converters. Since DS converters delay their output relative to SA converters, skews in signal timing appear.
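To make the DS/SA skew in the last bullet concrete, here is a minimal Python sketch. It assumes both channels run at the same sample rate and that the DS converter's delay is a known whole number of samples; that number must come from the device datasheet. The function names and the example delay are hypothetical.

```python
def skew_seconds(delay_samples, sample_rate_hz):
    """Timing skew introduced by a converter delay given in samples."""
    return delay_samples / sample_rate_hz


def align_delayed_channel(delayed, reference, delay_samples):
    """Pair each delayed-channel sample with the reference sample
    that was taken at the same instant.

    delayed[k] is assumed to represent the same moment as
    reference[k - delay_samples].
    """
    if delay_samples < 0:
        raise ValueError("delay_samples must be non-negative")
    usable = max(0, min(len(delayed) - delay_samples, len(reference)))
    aligned_delayed = delayed[delay_samples:delay_samples + usable]
    aligned_reference = reference[:usable]
    return aligned_delayed, aligned_reference
```

For instance, a hypothetical delay of 40 samples at 50 kS/s gives `skew_seconds(40, 50_000)`, which is 0.8 ms of skew. That is enough to matter when comparing fast events across channels.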

Using hardware outside of its specs

- Unattainable sample/update rates, whether too slow, too fast, or between allowable rates.

- Out of range or under range inputs can lead to acquired signals that are clipped, or so low in amplitude that only a few of the quantized bits are utilized.

- Out of range outputs can result in non-functioning actuation. Perhaps the more prevalent issue in this item is driving a digital output from the hardware into a unit under test (UUT) that expects more current than the hardware can provide. We’ve seen this issue with some of our customers trying to use an analog output to control a relay, but it can also happen with digital outputs. Also, too much current or too high a voltage can destroy the UUT.
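The point about under-range inputs using only a few quantized bits can be estimated with simple arithmetic. The Python sketch below assumes an ideal converter with evenly spaced codes across its full-scale range; the function name is made up for this example.

```python
import math


def bits_utilized(signal_peak_to_peak, full_scale_range, adc_bits):
    """Approximate number of ADC bits exercised by a signal.

    Assumes an ideal converter whose 2**adc_bits codes are spread
    evenly over full_scale_range (same units as the signal).
    """
    if signal_peak_to_peak <= 0 or full_scale_range <= 0:
        raise ValueError("amplitudes must be positive")
    # A signal larger than the range is clipped, so cap the fraction at 1.
    fraction = min(signal_peak_to_peak / full_scale_range, 1.0)
    codes_spanned = fraction * 2 ** adc_bits
    return max(0.0, math.log2(codes_spanned))
```

As an example, a 20 mV peak-to-peak signal on a 16-bit input configured for a 20 V span (±10 V) covers roughly 6 of the 16 bits: `bits_utilized(0.02, 20.0, 16)` is about 6.03. Picking a smaller input range or adding gain ahead of the converter recovers resolution.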

Underestimating the development time

- If the driver exists: the time needed to learn and utilize the driver can be much more than initially expected; some vendor drivers are complicated.

- If the driver doesn’t exist: the time needed to learn the instrument‘s interface and develop a driver can be daunting.

Forgetting to save and restore the instrument configuration

- If the instrument resets after a power cycle, the configuration might return to factory settings rather than the configuration you need for your test measurements.

- If the instrument configuration is changed by another application or by pressing some manual buttons or menu items, the instrument needs to be returned to the configuration you expect before your test proceeds.
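One way to guard against both situations is to re-apply and verify a known configuration before every test run. The Python sketch below is an illustration only. It assumes a message-based instrument where a setting is written as `COMMAND value` and read back by appending `?`, which is a common convention but not universal. The class name and the command strings in the usage note are hypothetical.

```python
class ConfigGuard:
    """Re-applies a known instrument configuration and verifies it."""

    def __init__(self, write, query, settings):
        self._write = write    # callable(str) -> None, sends a command
        self._query = query    # callable(str) -> str, sends a query, returns the reply
        self._settings = dict(settings)

    def restore(self):
        """Write every expected setting, then read it back.

        Returns a dict of {command: actual_value} for settings that did
        not read back as expected; an empty dict means all matched.
        The comparison is string-based; real instruments may format
        numbers differently and need a numeric comparison instead.
        """
        mismatches = {}
        for command, value in self._settings.items():
            self._write(f"{command} {value}")
            actual = self._query(f"{command}?").strip()
            if actual != value:
                mismatches[command] = actual
        return mismatches
```

A test sequence could build `ConfigGuard(write, query, {"VOLT:RANG": "10", "TRIG:SOUR": "EXT"})` once and call `restore()` at the start of each run, refusing to proceed if the returned dict is not empty.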

Next Steps

If you’re trying to select test hardware and feeling overwhelmed by NI’s massive catalog of hardware options, check out our NI Hardware Selection Services.

If you’re in learning mode, here’s some info that you might find useful:

- NI Hardware Compatibility – real-world tips and lessons learned

- What should I keep in mind when I’m launching a new product and considering a custom manufacturing test system?

- What should I keep in mind when I’m launching a new product and considering a custom product validation system?

- Using LabVIEW for automated testing

- Tips for improving manufacturing test with advanced test reports

- Tips for improving design validation with advanced test reports

- How to improve a manual testing process

- Hardware testing process – How to test products during production

- Design validation testing with LabVIEW

- Hardware test plan for complex or mission-critical products

- Product testing methods for industrial hardware products

- Which NI platform best fits my automated test needs? cRIO, PXI, cDAQ?

- Instrument Control with LabVIEW

- How to prepare for when your test team starts to retire

- Practical manufacturing test and assembly improvements with I4.0 digitalization

- What to do with your manufacturing test data after you collect it

- 5 Keys to Upgrading Obsolete Manufacturing Test Systems

- How Aerospace and Defense Manufacturers Can Make the Assembly and Test Process a Competitive Advantage

- 9 Considerations Before you Outsource your Custom Test Equipment Development

- Reduce Manufacturing Costs Report

- Improving Manufacturing Test Stations – Test Systems as Lean Manufacturing Enablers To Reduce Errors & Waste

LabVIEW Remote Monitoring

6 ways to do remote monitoring with NI LabVIEW

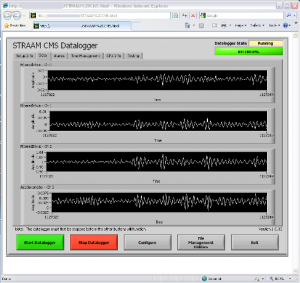

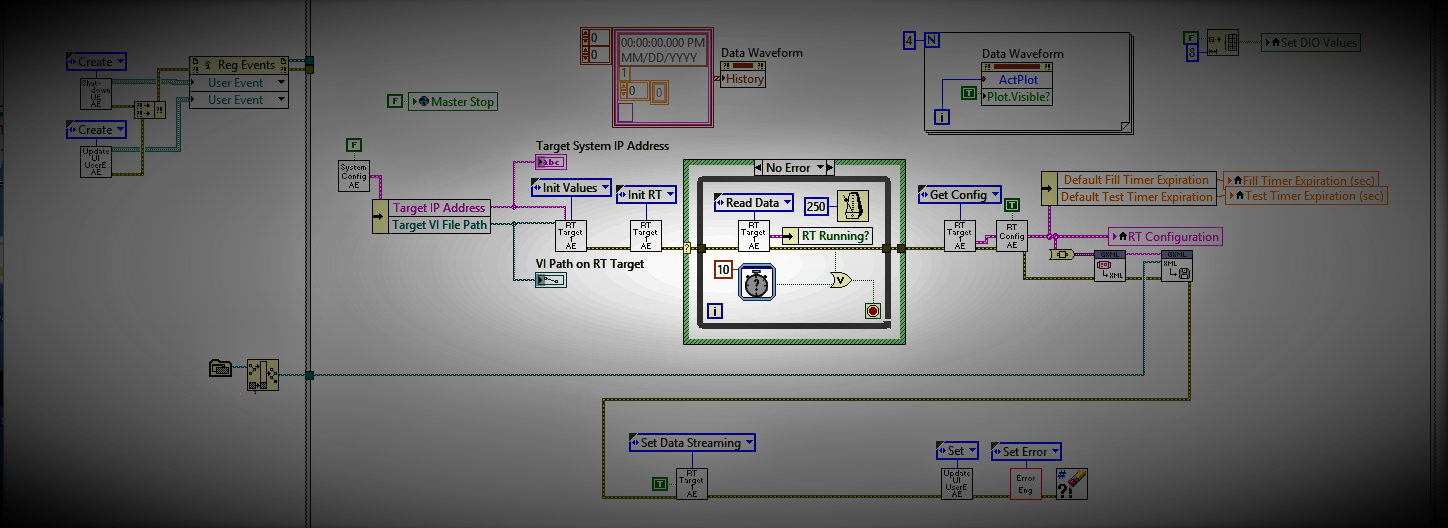

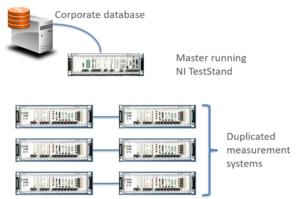

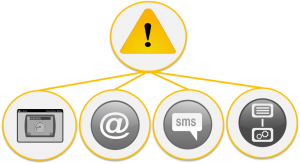

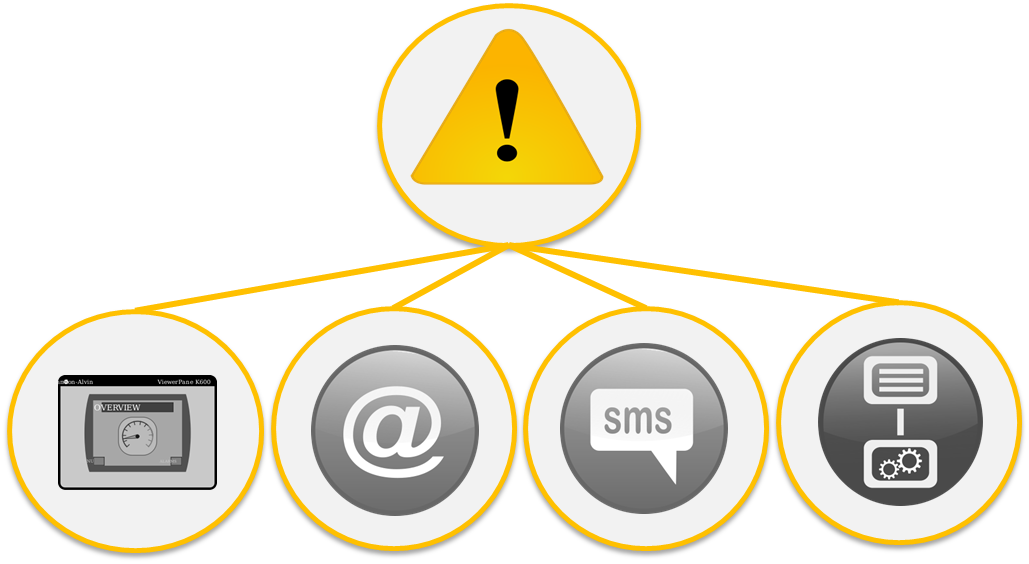

In this article, we define LabVIEW remote monitoring as acquiring measurement data from a distant location (e.g., the other side of a factory, a different building, or halfway around the world) from where the data is to be analyzed/utilized. The data might simply be viewed before later use or it might be written to a database for further analytics.

So what might you want to remotely monitor and from where?

- Test equipment on the factory floor from your office

- Power generation equipment at some remote customer site halfway around the world

- A product out in the field back at corporate

Since this article was originally published in 2018, NI’s support for web-based applications has exploded. Some of the methods we originally discussed, such as LabVIEW Remote Panels, have been superseded, while others, such as LabVIEW Raw TCP functions, remain.

We’ve also removed NI InsightCM, since this platform has been transferred to Cutsforth and specialized to various specific monitoring applications.

While reviewing the methods below, keep in the back of your mind how you are going to manage the datasets your remote system will be sending you. So much work has been done on SQL databases and other supporting tools since we originally wrote this article that we strongly recommend you consider using a database to help organize and manage your data.

Here are the updated 6 methods for remote monitoring with LabVIEW:

- Windows remote desktop

- Raw TCP functions in LabVIEW

- LabVIEW network streams

- LabVIEW Web Server

- NI WebVI – G Web development

- NI SystemLink

Caution: there are lots of different options (with varying degrees of completeness) to use here. If you don’t know what you’re doing, you can end up with a non-working or at least a very error-prone application.

Windows remote desktop

What is it in a nutshell?

You can interact with a LabVIEW application that is running on a remote PC. You connect to that remote PC by using the Remote Desktop Protocol (now called Remote Desktop Connection) widely available since Windows XP.

What remote monitoring scenario is it best suited for?

If all you need to do is interact with an application and visualize graphs, remote desktop works well. However, control of this remote machine can only be done manually, just as if you were at the remote location sitting in front of the PC.

What should you know about it?

Transfer of data to another PC and control of the remote PC are manual processes. Also, the firewall configuration set up by IT may prevent connecting to the remote PC.

Suggested LabVIEW Developer Level

Novice

Raw TCP functions in LabVIEW

What is it in a nutshell?

Yep, you can talk raw TCP in LabVIEW.

What remote monitoring scenario is it best suited for?

This method generally only makes sense when you want to create a custom messaging scheme on top of TCP, but with some of the other libraries available (like NI’s AMC), it’s best not to create your own unless it is required.

What should you know about it?

You’ll have a lot of control, but also a lot of opportunity to make mistakes.
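To show what "a custom messaging scheme on top of TCP" involves, here is a minimal Python sketch of one common approach, length-prefixed framing. TCP delivers a byte stream with no message boundaries, so the sender prefixes each message with its length and the receiver reassembles messages from whatever chunks arrive. The 4-byte big-endian header is an assumption chosen for this example, not a LabVIEW or NI format.

```python
import struct

HEADER = struct.Struct(">I")  # 4-byte big-endian unsigned payload length


def pack_message(payload):
    """Prefix a bytes payload with its length so the receiver can find its end."""
    return HEADER.pack(len(payload)) + payload


def unpack_messages(buffer):
    """Remove and return every complete message at the front of `buffer`.

    `buffer` is a bytearray that accumulates bytes received from the
    socket; incomplete trailing data is left in place for the next call.
    """
    messages = []
    while len(buffer) >= HEADER.size:
        (length,) = HEADER.unpack_from(buffer)
        end = HEADER.size + length
        if len(buffer) < end:
            break  # the rest of this message has not arrived yet
        messages.append(bytes(buffer[HEADER.size:end]))
        del buffer[:end]
    return messages
```

The receiving loop would append each `recv()` result to the buffer and then call `unpack_messages(buffer)`. Forgetting that a single read can return half a message, or two messages at once, is one of the easier mistakes to make with raw TCP.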

Suggested LabVIEW Developer Level

Intermediate

LabVIEW Network streams

What is it in a nutshell?

A built-in method that provides a way to share data across a network. This capability is a step up from raw TCP and, while not as flexible, it is easier to use. A good overview about LabVIEW Network streams can be found on NI’s website. A very high-level explanation is also available.

What remote monitoring scenario is it best suited for?

Network streams do a good job of streaming lossless LabVIEW data from point to point over a network and are built using TCP and UDP.

What should you know about it?

This method is more accessible than raw TCP, but you’re going to have to write a lot of client/server processes. And, since this method is strongly focused on moving data, you might also want to consider having a web UI or the raw TCP functions to interact with the data sending application.

Suggested LabVIEW Developer Level

Intermediate

LabVIEW Web Server

What is it in a nutshell?

This service manages HTTP requests from LabVIEW VIs and other apps, such as those written with HTML and JavaScript, to call LabVIEW VIs. The responses from those VIs need to be of a form that can be rendered by a browser or any other app that can consume the data served up by those requests.

What remote monitoring scenario is it best suited for?

Any time you wish to have a thin client on a remote PC, such as a browser, and wish to have LabVIEW VIs compose the responses.

Compared with LabVIEW WebVIs, discussed next, using the LabVIEW Web Server may be a simpler deployment and offer more flexibility, since you are closer to the raw HTTP messaging, but you have to do more development work to get to the same features that the WebVIs offer.

What should you know about it?

You’ll need to know about HTML, JavaScript, C#, and likely some of the many pre-developed libraries to build the code that creates the HTTP requests (e.g., GET method) and handles the responses.

If this approach sounds interesting, spend some 30 minutes with this overview video to learn more. Alternatively, consider working with a knowledgeable web developer to provide the web coding side of your project. With that path, this web work can be done in parallel with the LabVIEW coding and, if you’re not familiar with the web tool chain, you won’t have to educate yourself.
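For a feel of the client side of this exchange, the Python sketch below issues a GET request and decodes a JSON reply. It assumes the VI behind the endpoint has been written to return JSON text; the function name and the URL in the usage note are hypothetical, and a browser client would do the equivalent in JavaScript.

```python
import json
import urllib.request


def fetch_status(url, timeout=5.0):
    """GET a URL and decode the JSON body into Python objects.

    Assumes the server replies with UTF-8 encoded JSON.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

A monitoring script might call `fetch_status("http://test-station-1:8080/monitor/status")` on a timer and log the returned values. Host, port, and path here are placeholders for whatever your web service actually exposes.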

Suggested LabVIEW Developer Level

Advanced

LabVIEW WebVI – G Web development

What is it in a nutshell?

The G Web development application offers web development with familiar LabVIEW-like coding. At a basic level, the application builds the HTTP and JavaScript code that you’d have to write using the LabVIEW Web Server approach.

What remote monitoring scenario is it best suited for?

This approach is best applied to complicated monitoring applications or situations where you need to develop multiple web apps.

The WebVIs can interface with the LabVIEW Web Server. They also interface well with NI SystemLink, which is designed for monitoring the operational status of many machines. See below for an overview of SystemLink.

What should you know about it?

LabVIEW WebVI, also known as G Web Development on NI’s web pages, is an outgrowth of LabVIEW NXG. On some of NI’s webpages about WebVI, you may still see references to NXG, but NXG stopped being developed past 2022.

This development environment is only LabVIEW-like, and the dissimilarities with LabVIEW will require you to learn a new environment. Balance that fact against the knowledge ramp you’ll have to climb using the more basic Web Server approach.

An overview page of WebVIs on NI’s website holds additional details. And, NI has provided several examples such as using the NI Web Server and SystemLink Cloud, calling 3rd-party services like USGS data and JavaScript, and an application that uses multiple top-level VIs.

A recommendation would be to learn to do LabVIEW WebVI dev if you intend to make several web apps, since you’ll become faster at development with each additional app. You might also consider combining WebVI development with the web development environment embraced by professional web developers, which use HTML, JavaScript, C#, and so on. By combining your efforts with those of a professional web developer, you could focus on what you are good at and leave the web side to what they are good at.

Suggested LabVIEW Developer Level

Intermediate and Advanced

NI SystemLink

NI SystemLink is a platform for managing distributed systems in test and measurement. The platform is designed to remotely monitor variables from various PCs using the SystemLink UI. We’ve not familiarized ourselves extensively with SystemLink, but it may be worth looking into for applications that need to manage multiple data acquisition devices, querying and displaying device operational status, retrieving the status of the machine to which the device is connected, and transferring data to a central data store. And, it works tightly with the LabVIEW WebVI technology, so using both together may be the approach you are seeking.

What is it in a nutshell?

SystemLink is a piece of software developed by NI. Learn more from NI here: What is SystemLink? – National Instruments.

What remote monitoring scenario is it best suited for?

SystemLink is intended for use on test and embedded applications running on NI hardware for the purpose of publishing status and operational data for remote users. SystemLink can be deployed on-premise or in the cloud.

Coupled with LabVIEW WebVIs, complex remote visualization, control, and data management is available.

What should you know about it?

SystemLink is a complex platform, and the learning curve is steep. Since this article is about remote monitoring, an important aspect of SystemLink is its ability to interface with LabVIEW WebVIs (a.k.a. G Web Development) for the purpose of developing UIs for interfacing to the remote PCs.

Some interesting links to learn more are:

- SystemLink Cloud Overview – National Instruments

- How Do I Create Custom Dashboards and Remote Operator Interfaces with the SystemLink Software Configuration Module? – National Instruments

- Calling SystemLink Data Services

Suggested LabVIEW Developer Level

Intermediate, Advanced, and Expert depending on the complexity of the application

Hardware required

Of course you’ll need to connect to or create a network of some sort (or at least a point-to-point link). Here’s some hardware that could be used to help you get connected remotely:

- Ethernet port & network

- Wi-Fi enabled device (e.g. http://www.ni.com/en-us/shop/select/c-series-wireless-gateway-module)

- Cellular enabled device (e.g., https://shop.sea-gmbh.com/SEA-9745-4G-Mobilfunk-Kommunikationsmodul-Kit/60000070-SEA-9745-Kit). You may need to contact your cellular provider to enable this device to connect to the cell network.

- Proprietary wireless communication systems for long range or high-speed communications (e.g., https://www.gegridsolutions.com/Communications/licensedSolutions.htm )

Next Steps

Once the remote, likely web-enabled, LabVIEW application is running, you will eventually want to consider the steps associated with handling all the remote data you will be accumulating. An approach that has proven itself over many decades is to use a database. The database empowers you with searching, sorting, and filtering capabilities to help you find, review, and process the selected data.

Not all raw datasets, such as large waveforms, need to reside in the database. In fact, they probably shouldn’t, for reasons of database size and responsiveness. Regardless, we recommend storing at least enough baseline information to let you locate all the large datasets stored outside the database that meet the query criteria.
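The idea of keeping baseline information in the database while the large waveforms live elsewhere can be sketched with Python's built-in SQLite module. The table layout, column names, and file paths below are assumptions made up for this illustration; a production system would likely use a server-based database and a richer schema.

```python
import sqlite3


def create_index(conn):
    """Create a small table that describes each acquisition."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS acquisitions (
               id INTEGER PRIMARY KEY,
               station TEXT NOT NULL,
               started_utc TEXT NOT NULL,   -- ISO 8601 text sorts chronologically
               peak_value REAL,
               waveform_path TEXT NOT NULL  -- where the large file is stored
           )"""
    )


def record_acquisition(conn, station, started_utc, peak_value, waveform_path):
    """Store summary data plus a pointer to the waveform file."""
    conn.execute(
        "INSERT INTO acquisitions (station, started_utc, peak_value, waveform_path) "
        "VALUES (?, ?, ?, ?)",
        (station, started_utc, peak_value, waveform_path),
    )
    conn.commit()


def find_waveforms(conn, station, min_peak):
    """Return the file paths of waveforms that meet the query criteria."""
    rows = conn.execute(
        "SELECT waveform_path FROM acquisitions "
        "WHERE station = ? AND peak_value >= ? ORDER BY started_utc",
        (station, min_peak),
    )
    return [row[0] for row in rows]
```

The database stays small and fast because each row holds only a few summary values, yet a query such as `find_waveforms(conn, "pump-3", 4.0)` still leads straight to the files worth opening.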

Database design and interfacing is another whole topic not covered here. Many web developers also have skills in databases.

To learn more about LabVIEW-based remote monitoring with or without a web interface, check out these case studies:

- Industrial Equipment Remote Online Condition Monitoring

- Condition Monitoring – Improving the Uptime of Industrial Equipment

- Condition Monitoring for Electric Power Generation

- Remotely Monitoring Electrical Power Signals with a Single-Board RIO

- Industrial Embedded – Industrial Equipment Control

- Industrial Embedded Monitoring – Remote Structural Health Monitoring using a cRIO

- Online Monitoring of Industrial Equipment using NI CompactRIO

How to get help

If you work for a US-based manufacturer and are looking for help with LabVIEW-based remote monitoring and possibly data management, you can reach out here.

6 Questions to Ask before you Buy a Standalone Data Acquisition System

Standalone Data Acquisition Systems

6 Questions to Ask yourself before you Buy

Do you need a standalone data acquisition system (DAQ) to measure a bunch of analog and digital signals? Here are 6 things to think about before you pick one.

How long does it need to run for without interruption?

Days? Weeks? Months? Years? The duration you need will impact your choice of hardware & operating system more than anything else. If the processor is stressed keeping up with tasks, then there is a larger chance that data flow will get backed up and errors will occur from a buffer overflow. Spinning hard drives have moving parts and will wear out. Even the internal system time battery will cease to function after a decade or so (maybe sooner).

When it crashes, do you need it to auto-restart, call the mothership, or is there no serious need to recover from a crash?

Will it have access to a power source, or does it need its own power source?

If the standalone DAQ has access to prime power, that’s obviously a much easier scenario than having to supply your own portable power, like from a battery, solar panels, or some combination of various sources. Power and energy utilization calculations can get tricky for multi-mode operation or any scenario where the power draw varies significantly. Be conservative, take power measurements under real-world operating conditions, and consider temperature and cycle de-ratings for battery-based applications.
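A first-pass energy budget for multi-mode operation is a weighted average, as the Python sketch below shows. The mode powers, duty fractions, and the 0.7 derating factor in the usage note are placeholders rather than recommendations; real numbers should come from measurements under operating conditions and from the battery datasheet.

```python
def average_power_w(modes):
    """Time-weighted average power.

    `modes` is a list of (power_watts, fraction_of_time) pairs whose
    fractions must add up to 1.
    """
    total_fraction = sum(fraction for _, fraction in modes)
    if abs(total_fraction - 1.0) > 1e-6:
        raise ValueError("time fractions must sum to 1")
    return sum(power * fraction for power, fraction in modes)


def battery_runtime_hours(capacity_wh, avg_power_w, derating):
    """Estimated runtime after derating the nominal battery capacity.

    `derating` (0 to 1) lumps together temperature, aging, and
    cycle-life effects; it is an assumed factor, not a measured one.
    """
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return capacity_wh * derating / avg_power_w
```

For example, a system drawing 12 W while acquiring 10% of the time and 2 W while idle averages 3 W. With a 100 Wh battery derated to 70%, `battery_runtime_hours(100, 3.0, 0.7)` gives a little over 23 hours, noticeably less than the 33 hours the nominal capacity suggests.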

Does the DAQ need to be able to transmit data remotely from the field or a plant, or is local storage sufficient?

If you’ve got access to a fiber link or copper Ethernet, great. If not, this challenge can often be met if:

- There’s access to a cellular tower or Wi-Fi network (there are other public and private radio alternatives as well if needed).

- EMI (Electromagnetic Interference) levels are below required thresholds for the link. If you really care if your link works, an RF site survey is warranted.

- The DAQ bandwidth needs are less than what’s available from the link.

Something to think about if you do have this need is whether the DAQ system needs store-and-forward capabilities to buffer acquired data when the link goes down and continue sending once the link is back online. And, how much storage would you need? You should consider a lower rate data stream, such as Modbus TCP, to uplink features computed from the raw data, so you at least have a view on status even if transmission bandwidth is low.
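Store-and-forward behavior can be sketched in a few lines of Python. This is a simplified in-memory illustration; the class name and the `send` callback are hypothetical, and a fielded system would typically buffer to non-volatile storage so that pending data survives a power loss.

```python
from collections import deque


class StoreAndForward:
    """Buffers records while the link is down and sends them in order later."""

    def __init__(self, send, max_records):
        self._send = send  # callable(record) -> bool, True when delivered
        # A bounded deque silently drops the OLDEST record when full,
        # which caps storage at max_records.
        self._pending = deque(maxlen=max_records)

    def submit(self, record):
        """Queue a new record and try to deliver everything pending."""
        self._pending.append(record)
        self.flush()

    def flush(self):
        """Send pending records oldest-first; stop at the first failure."""
        while self._pending:
            if not self._send(self._pending[0]):
                break  # link is down, keep the record for the next attempt
            self._pending.popleft()

    @property
    def backlog(self):
        """Number of records still waiting to be sent."""
        return len(self._pending)
```

The `max_records` limit is where the storage question becomes concrete: it should cover the longest outage you expect, multiplied by the record rate. Dropping the oldest data first is a design choice; some applications would rather keep the oldest records and discard new ones.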

If local storage is sufficient, you’ll still want to think about what happens when the memory gets corrupted, loses sectors, or dies completely. How much data are you going to lose, and do you need to mitigate with redundancy techniques like memory mirroring? See our article on LabVIEW remote monitoring for more info.

Do you need to be able to access the data acquisition system remotely to configure it or check on its status?

Maybe you need to be able to modify one of the pre-trigger acquisition parameters, or maybe you find that you need to bump up the data acquisition rate, but your DAQ system is either in an inconvenient-to-access location in the plant, or maybe it’s halfway around the world.

Or maybe you know some important event is happening in the next hour, and you want to view the status to make sure the DAQ is operational.

There are several ways to enable remote access to your DAQ. Depending on your specific needs and the hardware you select, you may be able to utilize built-in software utilities, or you may need custom software developed. See our article on LabVIEW remote monitoring for more info.

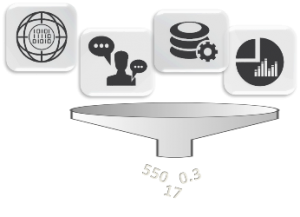

Do you need to simply acquire raw data, or do you need that data to be processed in some way post-acquisition?

Maybe you just need raw digital data to analyze on your PC after it’s acquired. But you may run into bandwidth or storage space issues if you keep everything you acquire, or maybe that extra data will just increase your processing/analysis time more than you can tolerate.

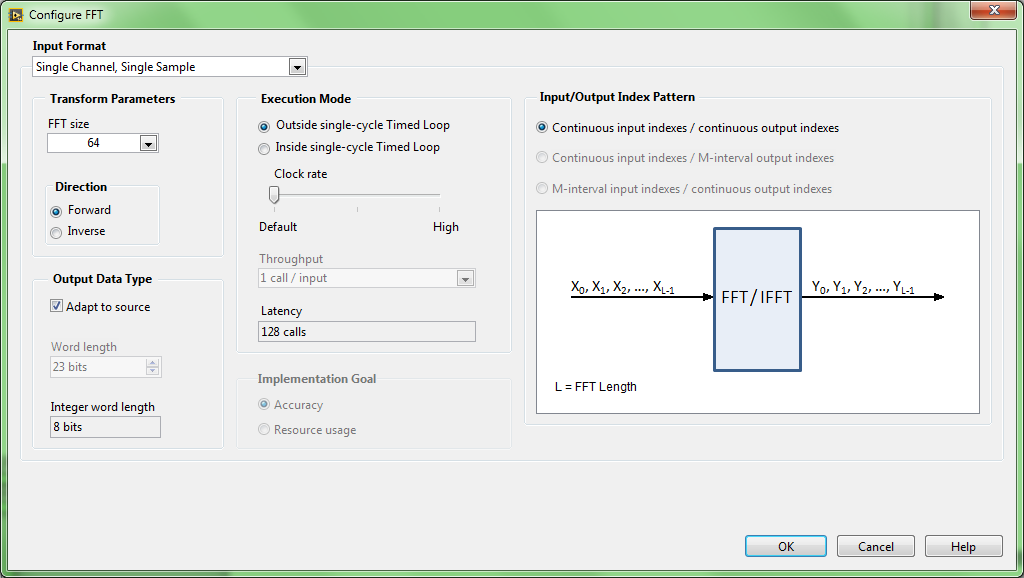

You may want to do some filtering, windowing, or maybe even some time- or frequency-domain processing before you record the data you’ve acquired. Depending on the amount of number crunching you need to do, a basic CPU may do the trick, or you may need a dedicated number crunching processor like a DSP, a multicore processor, or an FPGA.
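As a small illustration of processing before recording, the Python sketch below reduces each block of raw samples to two time-domain features, RMS and peak. The function name and the block size in the usage note are arbitrary choices for this example, and a real system might compute spectra or other features instead.

```python
import math


def block_features(samples, block_size):
    """Reduce raw samples to one (rms, peak) pair per full block.

    Samples left over after the last full block are ignored.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    features = []
    for start in range(0, len(samples) - block_size + 1, block_size):
        block = samples[start:start + block_size]
        rms = math.sqrt(sum(x * x for x in block) / block_size)
        peak = max(abs(x) for x in block)
        features.append((rms, peak))
    return features
```

With a block size of 1,000, every 1,000 raw samples become two numbers, a 500-fold reduction in what has to be stored or transmitted. The cost is that the raw waveform is gone, so this suits cases where the features are what you actually analyze.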

What does your input channel list look like?

The biggest driving factors here will be related to:

- Sample rates – if your data changes very slowly and you only need to acquire data at a few samples per second, that’s a very different animal than collecting at hundreds of kS/s or even MS/s.

- # of signals/channels to acquire data from – are you just trying to measure a few TTL levels or 0-10V analog input voltages, or are you trying to measure temperature, vibration, force, pressure, etc. on dozens or hundreds of channels?

- Min/max/range of signals – this is where signal conditioning really comes into play. If you need to measure µA or mV, you’d better understand how to appropriately amplify, filter, and isolate that signal before it becomes a digital value.

- Synchronization – do some or all of your channels need to be digitizing measurements at the same time or can you tolerate some lag between samples across channels?
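The channel count and sample rate translate directly into a data rate, which is worth computing early. The Python sketch below assumes every channel runs at the same rate and uses the same number of bytes per stored sample; both function names are made up for this example.

```python
def data_rate_bytes_per_s(channels, sample_rate_hz, bytes_per_sample):
    """Raw data rate when all channels share one sample rate and format."""
    return channels * sample_rate_hz * bytes_per_sample


def storage_per_day_gb(rate_bytes_per_s):
    """Storage consumed by 24 hours of continuous acquisition, in GB (1e9 bytes)."""
    seconds_per_day = 86_400
    return rate_bytes_per_s * seconds_per_day / 1e9
```

For instance, 16 channels at 50 kS/s with 2-byte samples produce 1.6 MB/s, or about 138 GB per day of continuous acquisition. Numbers like these quickly show whether local storage, the uplink, or on-board data reduction needs attention.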

Do you like examples? Here are some case studies of standalone data acquisition systems we’ve developed:

If you’d like to chat about your standalone data acquisition system needs, you can reach out to us here.

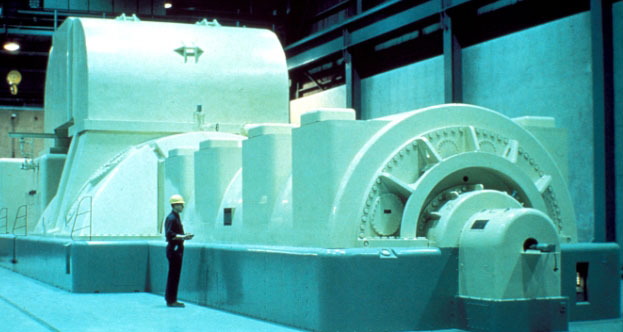

Continuous Monitoring & Data Acquisition from Large Industrial Equipment

Why do it. How to do it. Gotchas. How to get help.

What are we talking about here?

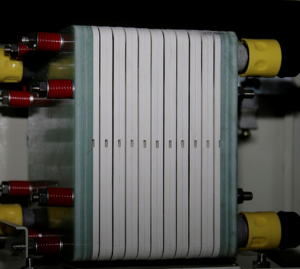

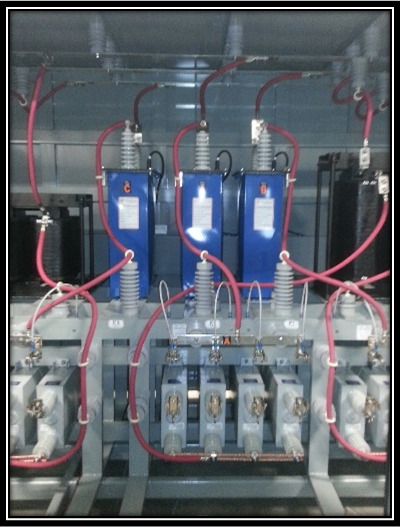

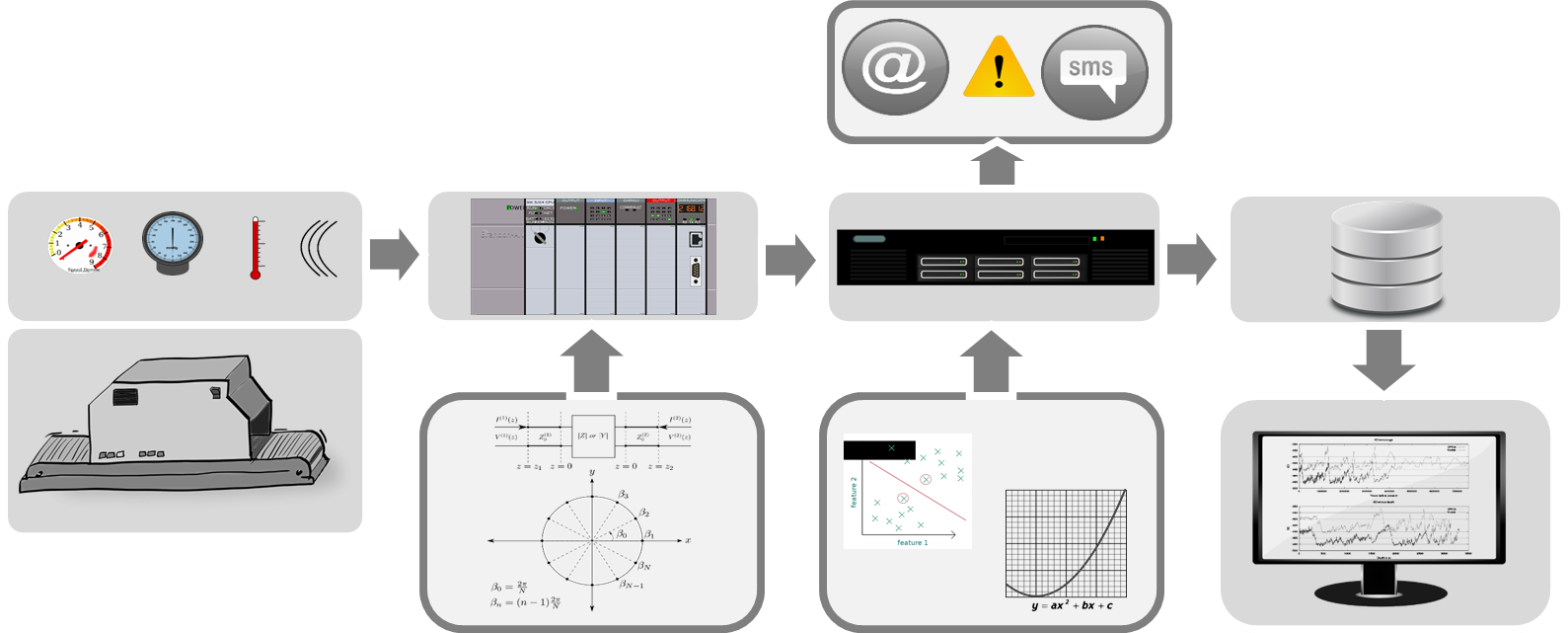

We’re essentially talking about:

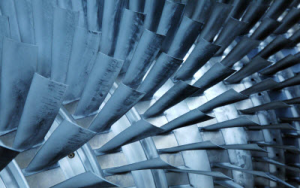

- Mounting some data acquisition hardware on a piece of industrial equipment and connecting various sensors to take measurements (e.g., temperature, pressure, current, voltage, vibration) from the equipment.

- Processing and sending the acquired data from the data acquisition hardware to some other location either in the building or halfway around the world.

- Analyzing the data (either by hand or automatically) to make better decisions about the industrial equipment.

What better way to illustrate what we’re talking about than by showing a few case studies?

Why do it?

Generally, you’ll be interested in monitoring large industrial equipment because you want to better understand and track the health or condition of the equipment, in order to spot trends in operating parameters that indicate reduced performance or even imminent damage.

How to do it

At a high level, you’re generally going to need to figure the following out:

- Make sure you have a business case for doing this monitoring, whether qualitative (e.g., customer satisfaction, ISO 50001) or quantitative (e.g., uptime, maintenance, lost production revenue).

- Determine what properties (e.g., vibration, temperature, current) make sense to monitor from your industrial equipment.

- Select the algorithms needed to compute features or trend those features. This may require proper data to be collected, depending on the sophistication of the algorithms.

- Select appropriate sensors and acquisition hardware to collect data at the required rates, range, sensitivity, and synchronization.

- Develop custom software or install a COTS application to process the data and send it off for analysis.

- Install and test the online monitoring system.

- Start collecting data for analysis at a small scale, show success, and iterate.
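As a simple example of "trending a feature" from the steps above, the Python sketch below fits a straight line to a feature over time and returns its slope. Least-squares fitting is just one basic option chosen for illustration; the function name is hypothetical and real condition-monitoring algorithms are often more sophisticated.

```python
def trend_slope(times, values):
    """Least-squares slope of `values` versus `times`.

    The result is in value units per time unit; a sustained nonzero
    slope suggests the monitored feature is drifting.
    """
    n = len(times)
    if n != len(values) or n < 2:
        raise ValueError("need at least two matching points")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    spread = sum((t - mean_t) ** 2 for t in times)
    if spread == 0:
        raise ValueError("all time values are identical")
    covariance = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return covariance / spread
```

If a bearing's vibration RMS is logged once a day, `trend_slope(days, rms_values)` gives the drift per day, which can be compared against an alarm limit chosen from your own baseline data.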

Gotchas to watch out for

- Remotely debugging is challenging. Be sure to include various event & error logging functions.

- Remote re-start when the monitoring system goes down.

- Remote reconfiguration of channels, acquisition parameters, and analysis.

- Not capturing enough training data to be able to massage the algorithms in order to give you the confidence that the monitoring system can detect fault conditions.

- Voiding the warranty on the industrial equipment.

- Safety concerns – do you have appropriate controls and safeguards in place?

- Cybersecurity – what is the monitoring system connected to, and what vulnerabilities did you just open up?

Depending on the amount of data being collected, you may also want to consider how you will assess any anomalous situations. If you are already performing route-based data collection, then your existing assessment processes will be adequate if you continue to collect data at the same volume. More likely you will collect more data, because it’s easy and incremental costs are insignificant, leading to a situation where you will either need to add staff to assess the extra data or you will have to automate the assessment process to help prioritize issues. If you’d like help creating an online monitoring system for your industrial equipment, reach out for a consultation.

LabVIEW Data Acquisition

Gotchas, tips & tricks, and how to get help

What is it and how’s it used?

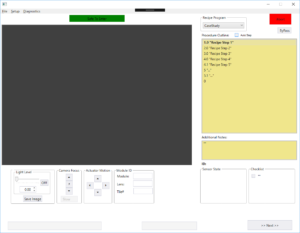

LabVIEW-based data acquisition involves writing software on top of appropriate hardware to acquire data from various sensors (e.g., temperature, pressure, current, …). Those data are then usually manipulated and/or filtered before being displayed and/or recorded for further analysis.

LabVIEW is a software development environment created by National Instruments. Originally it was focused on taking measurements from various lab instruments, but it’s expanded drastically from its inception. Strictly speaking, LabVIEW is not a coding language, it’s a development environment. The language is actually called “G”, but most people refer to LabVIEW as if it’s a language (i.e., most people would say “it’s coded in LabVIEW”).

If you’re curious what sorts of acquisition LabVIEW is used for, check out this resource, which covers the 4 main uses for LabVIEW.

Gotchas:

Assuming that just because it’s easy to get started it’s also easy to finish. LabVIEW is a very complex programming environment. The good news is that there’s not much you can’t do with it. The bad news is that you have the freedom to get yourself into a lot of trouble (e.g., sluggish user interfaces, dropped communication packets, acquisition buffer overruns, files that grow too large, or control loops that miss their timing or have too much cycle-to-cycle variation).

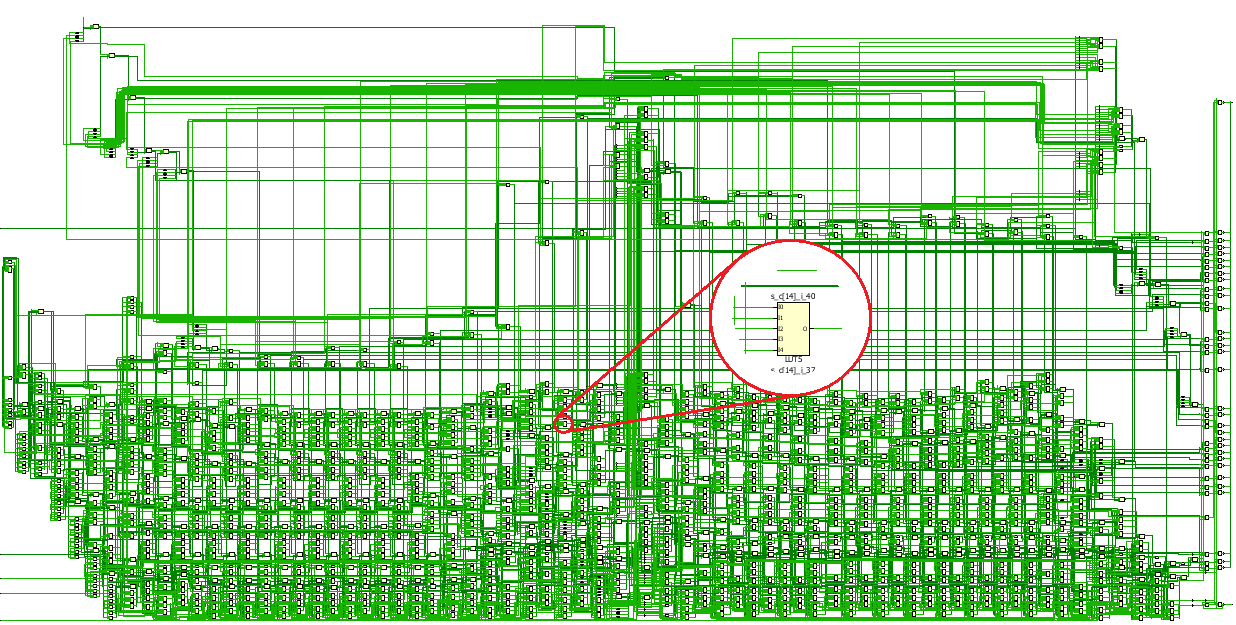

Not organizing your LabVIEW code. Trust me, you won’t want a gigantic rat’s nest to debug, update, or pass off to someone else in the future. With all those wires, LabVIEW brings graphical meaning to spaghetti code!

Hooking up a strain gauge or a low-voltage sensor (such as a thermocouple) without signal conditioning. Other sensor types need it too. Signal conditioning is hugely important for recovering your signal(s) of interest.
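A quick back-of-the-envelope calculation shows why. The Python sketch below assumes a type K thermocouple at roughly 41 µV/°C (an approximate room-temperature figure) read by a 16-bit converter with a ±10 V input range; the converter numbers and the gain of 100 are illustrative, not taken from any particular module.

```python
def lsb_volts(full_scale_span_v: float, bits: int) -> float:
    """Voltage represented by one converter count."""
    return full_scale_span_v / (2 ** bits)


def temperature_resolution_c(
    full_scale_span_v: float,
    bits: int,
    sensitivity_v_per_c: float,
    gain: float = 1.0,
) -> float:
    """Smallest temperature step the converter can resolve, in degrees C."""
    return lsb_volts(full_scale_span_v, bits) / (gain * sensitivity_v_per_c)


# Assumed values: +/-10 V span (20 V), 16 bits, ~41 uV/C for type K.
raw = temperature_resolution_c(20.0, 16, 41e-6)                   # about 7.4 C per count
conditioned = temperature_resolution_c(20.0, 16, 41e-6, gain=100.0)  # about 0.07 C per count
```

Without amplification, each count is worth several degrees; with a gain stage ahead of the converter, the same hardware resolves a small fraction of a degree. Noise filtering and cold-junction compensation are further reasons to condition the signal, and they are not modeled here.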

Coding without requirements. It’s obviously more fun to just start banging out some code, but you’ll probably regret not taking a step back to at least jot down a bulleted list in a doc. Why? Because:

- You’ll want to know what you’re going to test in order to prove to yourself the thing works.

- It makes it more apparent if you’ve got conflicting requirements.

- It’ll help you think of other features you wanted but forgot about at one point or another.

If you’d like to start considering your requirements but aren’t sure where to start, feel free to check out our requirements templates (these might be more involved than is appropriate for your needs, but it gives you a starting point):

- industrial embedded requirements and specification template.

- test system requirements and specification template.

Assuming all downloadable instrument drivers will just work out of the box. Some instrument drivers are of great quality, while others are horrible. You’ll want to know where your driver falls on the spectrum, but for sure don’t assume it’ll just work. Get a sense of driver quality from feedback on various forums.

Assuming your application will just work the first time out of the box. It likely won’t. Make your life less stressful and allocate debugging time up front. As a very loose data point, consider allocating anywhere from 50% to 150% of the time you spent coding to debugging, depending on the overall complexity of course.

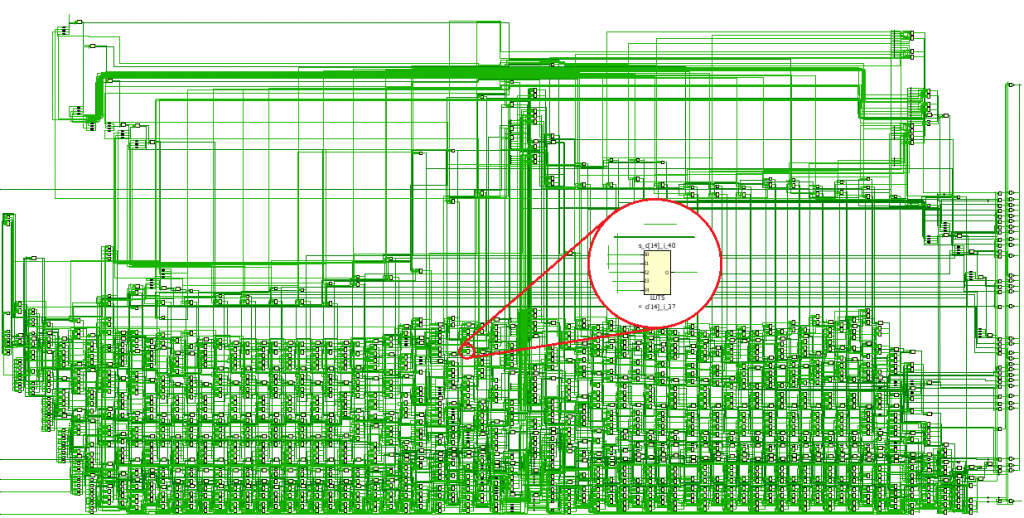

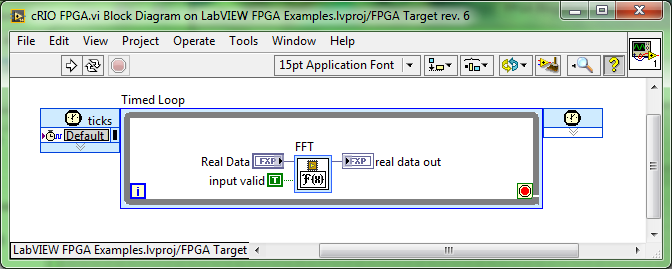

Not understanding what an FPGA is and how it works. You won’t always need an FPGA-based acquisition system, but if you do, you should understand that you’re coding a very different piece of hardware. If you’re looking to understand some of the very basics, see here: FPGA Basics – A Look Under the Hood.

Fundamentals – How to do it:

NI covers the basics well. See http://www.ni.com/white-paper/3536/en/. Also, check out this quick video from University of Minnesota: https://www.youtube.com/watch?v=GBhJk5Tnshc.

Tips & Tricks:

There are lots of ways to move data between loops in LabVIEW and to send commands along with the data to tell the receiver what to do with those data. Here are two methods, one tried-and-true and one which I bet you didn’t know: Communicating between Parallel Loops.
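As a rough text-language analogue of the general idea (not the specific methods in the linked article), the Python sketch below passes (command, data) pairs through a queue from one loop to another. The command names `"average"`, `"log"`, and `"stop"` are invented for the example.

```python
import queue
import threading


def consumer(inbox: "queue.Queue", results: list) -> None:
    """Receive (command, payload) messages and act on each until told to stop."""
    while True:
        command, payload = inbox.get()
        if command == "stop":
            break
        if command == "average":
            results.append(("average", sum(payload) / len(payload)))
        elif command == "log":
            results.append(("logged", payload))
        else:
            results.append(("unknown", command))


def run_demo() -> list:
    """Producer side: send data along with commands, then shut down cleanly."""
    inbox = queue.Queue()
    results = []
    worker = threading.Thread(target=consumer, args=(inbox, results))
    worker.start()
    inbox.put(("average", [1.0, 2.0, 3.0]))
    inbox.put(("log", "run started"))
    inbox.put(("stop", None))
    worker.join(timeout=5)
    return results
```

The command travels with the data, so the receiving loop never has to guess what a given payload means.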

Another popular method leverages TCP/IP for communicating between sections of a single application or between multiple applications, either on the same PC or between PCs. Check out: Are You Using Network Streams?
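If you roll your own TCP/IP messaging rather than using a ready-made layer, remember that TCP delivers a byte stream, not discrete messages, so the sender and receiver need to agree on message boundaries. The Python sketch below shows one common convention, a 4-byte length prefix; the function names are illustrative, and this is not how Network Streams work internally.

```python
import struct

HEADER = struct.Struct(">I")  # 4-byte big-endian payload length


def frame(payload: bytes) -> bytes:
    """Prefix a payload with its length so the receiver can find its end."""
    return HEADER.pack(len(payload)) + payload


def extract_frames(buffer: bytes):
    """Split a receive buffer into complete payloads plus leftover bytes."""
    messages = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        (length,) = HEADER.unpack_from(buffer, offset)
        end = offset + HEADER.size + length
        if end > len(buffer):
            break  # partial message; keep the bytes until more arrive
        messages.append(buffer[offset + HEADER.size:end])
        offset = end
    return messages, buffer[offset:]
```

The receiver appends each chunk it reads from the socket to the leftover bytes and calls `extract_frames` again.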

Don’t find yourself knee-deep in a project only to find that you’ve got a sea of intermingled code, making it hard to differentiate what goes where and how to find files. Learn how to avoid this: Why Poor LabVIEW Project Organization Makes Your Code Stink.

If you want to do synchronous data acquisition across multiple channels, even if across boards, and perhaps even synchronized with output channels, you should check out: http://www.ni.com/product-documentation/4322/en/

LabVIEW uses data flow to sequence code execution. This approach offers inherent multitasking capabilities, including parallel tasks managed in multiple while loops. However, those parallel tasks usually need to communicate with one another. Learn about several types of LabVIEW synchronization tools: Synchronization in LabVIEW.

How to get help:

If you’re looking for LabVIEW data acquisition help for your application, see here for how we can help solve your data acquisition problems.

Migrating NI Compact FieldPoint to cDAQ or cRIO

March 2018

Are you currently using a Compact FieldPoint (cFP) device and want to know what you should be thinking about to transition to a CompactRIO or CompactDAQ platform?

Are you doing control inside the cFP?

Are you doing any control with your system? If so, is it being done within the cFP, or is it actually being done on a PC connected to the cFP?

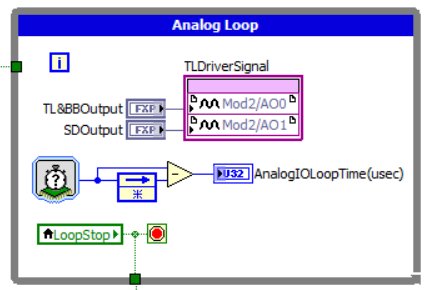

If you have control algorithms running at higher than 100 Hz, you’ll probably want to use a cRIO (or at least a cDAQ with an RT controller). The FPGA & real-time processor lend themselves well to high-rate deterministic loops, with the FPGA being able to run faster than RT.
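If you are not sure how fast or how steady your existing loop really is, logging a timestamp per iteration and summarizing the result is a cheap first step. The Python sketch below assumes you have such a list of timestamps in seconds; `loop_jitter` is a made-up helper name.

```python
from typing import Dict, Sequence


def loop_jitter(timestamps_s: Sequence[float], target_period_s: float) -> Dict[str, float]:
    """Summarize achieved loop rate and worst-case deviation from the target period."""
    periods = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    if not periods:
        raise ValueError("need at least two timestamps")
    mean_period = sum(periods) / len(periods)
    worst = max(abs(p - target_period_s) for p in periods)
    return {
        "mean_period_s": mean_period,
        "achieved_rate_hz": 1.0 / mean_period,
        "worst_case_jitter_s": worst,
    }


# Example: a nominal 100 Hz loop whose iterations wander by up to 1 ms.
stats = loop_jitter([0.000, 0.010, 0.021, 0.030], target_period_s=0.010)
```

If the worst-case deviation is a large fraction of the loop period, that is a sign the loop belongs on a real-time or FPGA target.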

Also, if you’re doing anything even remotely safety-related, you’ll want a cRIO, and sometimes you’ll even want to utilize a PLC or the FPGA side of the cRIO.

What can you do with the cFP that you can’t do with cDAQ?

I/O:

In general, beware of the size of the screw terminals available with cDAQ C Series modules; they tend to be smaller than those available with cFP modules. This smaller size can be an issue if your field wiring is too thick (i.e., has a smaller wire gauge number). Note that some C Series modules come in a couple of connector styles: built-in terminal blocks or DSUB-style connectors, the latter allowing cabling to larger terminal blocks with bigger screws.
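For a quick sanity check on wire sizes, the standard AWG formula converts a gauge number to a conductor diameter. In the Python sketch below, the 16 to 28 AWG terminal range is a placeholder; check the datasheet of the specific module for its rated range.

```python
def awg_diameter_mm(awg: int) -> float:
    """Conductor diameter in mm for a solid wire of the given AWG number."""
    return 0.127 * 92 ** ((36 - awg) / 39)


def fits_terminal(awg: int, largest_wire_awg: int, smallest_wire_awg: int) -> bool:
    """True if the wire lies within the terminal's rated gauge range.

    A smaller AWG number means a thicker wire, so the largest acceptable
    wire has the lowest number.
    """
    return largest_wire_awg <= awg <= smallest_wire_awg


# Hypothetical terminal rated for 16 to 28 AWG:
thick_ok = fits_terminal(12, largest_wire_awg=16, smallest_wire_awg=28)  # False
thin_ok = fits_terminal(18, largest_wire_awg=16, smallest_wire_awg=28)   # True
```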

Since there are many more types of C Series modules than cFP modules, you will almost certainly find a C Series module to replace your cFP module, but channel counts per module can be different (e.g., current output) and you might have to substitute a couple of C Series modules to accommodate all the I/O in a single cFP module (e.g., combined current input and output). The specifics of which C Series modules can replace the cFP modules are listed in the section below titled “Module limitations”.

Finally, note that, from an I/O module standpoint, many, but certainly not all, of the C Series modules are compatible with cDAQ (see here http://www.ni.com/product-documentation/8136/en/ for more).

Performance:

Being an older vintage, cFP is pretty limited from a CPU speed and memory capacity standpoint compared to a cDAQ with controller. Plus, the analog I/O in C Series typically has more bits than a comparable cFP module.

Using Controller or Just the I/O:

Originally, cDAQ chassis were used only for I/O and the “controller” was the PC you connected to the cDAQ chassis. Back around 2015, NI introduced a standalone cDAQ with a controller attached to a chassis for holding the I/O modules. If your cFP hardware is being used just for I/O and you are using a PC as the “controller”, you can continue with that same system design by using a traditional cDAQ module-only chassis. If you were using LabVIEW RT to run an application on the cFP controller, you can use a cDAQ controller system.

Software Application:

Whichever style of cDAQ you choose, you will have to rewrite some part of your application. Unfortunately, you can’t just move the cFP app onto cDAQ and expect it to work. The largest change will likely be in the I/O channel addressing, since cFP uses a completely different scheme for channel definition than DAQmx. But, since cFP applications followed a “single point” access scheme (rather than waveforms), the translation will be fairly straightforward, even if not trivial.
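Before rewriting any I/O code, it can help to build an explicit old-to-new channel table and check that nothing is left unmapped. The Python sketch below is only a planning aid: the cFP item names are placeholders for whatever names your application uses, and the DAQmx-style names follow the usual `device/channel` pattern but are likewise invented for this example.

```python
from typing import Dict, List, Tuple

# Placeholder names; replace with the channels from your own system.
CHANNEL_MAP: Dict[str, str] = {
    "cFP-AI-110/Channel 0": "cDAQ1Mod1/ai0",
    "cFP-AI-110/Channel 1": "cDAQ1Mod1/ai1",
    "cFP-DO-401/Channel 0": "cDAQ1Mod2/port0/line0",
}


def translate_channels(cfp_items: List[str]) -> Tuple[Dict[str, str], List[str]]:
    """Return the mapped channels and a list of cFP items with no mapping yet."""
    mapped = {}
    missing = []
    for item in cfp_items:
        if item in CHANNEL_MAP:
            mapped[item] = CHANNEL_MAP[item]
        else:
            missing.append(item)
    return mapped, missing
```

Any entry in the missing list is a channel that still needs a replacement module or a design decision.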

Environmental:

The cFP hardware has the same operational temperature range as the cDAQ hardware: -40° to 70° C. The cFP has slightly better shock & vibration specs than the cDAQ hardware (50 g versus 30 g for shock, and 5 g vibration for both).

What can you do with cFP that you can’t do with cRIO?

Many of the remarks about moving to cDAQ from cFP also apply to moving to cRIO from cFP. However, some differences are listed below.

I/O:

The transition to C Series from cFP modules is the same for cRIO as cDAQ. Certainly, there are some C Series modules that don’t work with cDAQ and others that don’t work with cRIO, but none of these modules have comparable modules in cFP.

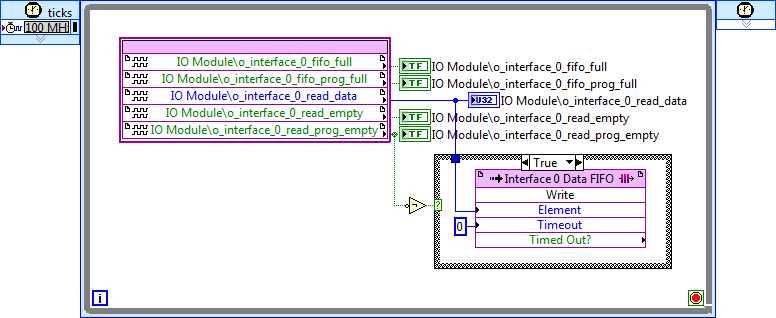

A point of difference is that using an FPGA to manage I/O via direct calls to the I/O channels, rather than going through FPGA Scan Engine (cRIO) or DAQmx (cDAQ), gives your cRIO a much faster reaction time. If your old cFP application depends on the slower responsiveness of the cFP controller and I/O, you may find that the cRIO system shows different behavior.

Performance:

The big change here, as alluded to earlier, is the availability of the FPGA in the cRIO. The cFP has nothing close to the performance capabilities available with an FPGA.

Software Application:

If your cFP application uses the cFP controller for the real-time capabilities, then a cRIO controller will give you all the capabilities you need to translate your application. As with cDAQ, the largest change will likely be in the I/O channel addressing, since cFP uses a completely different scheme for channel definition than Scan Engine or FPGA I/O. If you use the FPGA, while the I/O is programmed as single point access, you will still need to get those I/O values from your RT application layer into the FPGA, so there is another step involved. You’ll likely need to use a DMA FIFO.

Environmental:

The cRIO equipment can have the same operational temperature range as cFP of -40° to 70° C, but you can also purchase a cRIO controller with a smaller range of -20° to 55° C, so look for the extended temperature controllers if you need the wider range. Also, the cFP seems to have slightly better shock & vibration specs than the cRIO hardware (50 g versus 30 g for shock, and 5 g vibration for both), but the cRIO controllers are tested to 30 g with an 11 ms half sine and 50 g with a 3 ms half sine over 18 shocks at 6 orientations. If the information exists, it’s not easy to find in the NI literature exactly how the cFP equipment is tested for shock and vibration.

C Series Module Limitations

For the most part, whatever you could accomplish with a cFP from an I/O module standpoint, you can accomplish in a similar manner with a cRIO, with some notable limitations. See this NI white paper for more info: https://www.ni.com/en-us/support/documentation/supplemental/14/transitioning-from-compact-fieldpoint-to-compactrio.html.

Something to keep in mind as well is that there are 3rd party cRIO module manufacturers that may have what you’re looking for. See here: http://www.ni.com/product-documentation/2726/en/.

How to get help with a transition?

If you’d like help making the transition, Viewpoint can help. We used to use cFP back in the day, and we’ve used cDAQ & cRIO quite a bit. We’ve completed over 500 cRIO-based projects (see here for more). We’re also an NI Integration Partner.

Of course, there are tons of nuanced gotchas based on the specific cRIO/cDAQ model chosen, as well as your specific I/O needs. We can help you select modules and port code over to the new platform. To get started, it’s helpful to share whatever info you have revolving around: a hardware list, application overview, and source code. Feel free to reach out here to initiate a conversation.

Deep in learning mode? You might be interested in these resources:

- Online Monitoring of Industrial Equipment / Machines / Systems

- Which NI Platform is Right for Your Automated Test Needs? cRIO, PXI, cDAQ, sbRIO?

- NI Hardware Compatibility – real-world tips and lessons learned

- 6 Questions to Ask before you Buy a Standalone Data Acquisition System

- Continuous Monitoring & Data Acquisition from Large Industrial Equipment

- How to Diagnose Failing Custom Test Equipment

Why use LabVIEW

There are 5 main reasons to consider using LabVIEW:

- If you’re not a software developer but need to make quick measurements using real instruments – this requires little programming experience. However, to be clear, coding with LabVIEW requires you to follow good programming practices just like any other programming language. Like with any other programming language, garbage in equals garbage out.

- If you need tight integration between software and measurement/control hardware. LabVIEW has two targets that allow synchronization between measured inputs and calculated outputs. The first has jitter on the order of 1 ms and uses LabVIEW RT. The second has jitter on the order of 1 ns and uses LabVIEW FPGA.

- Because your company is already heavily invested in the ecosystem – I know this sounds sort of like an “if Billy jumped off a bridge” analogy, but it’s not. While this isn’t my favorite reason to use LabVIEW, pragmatically, LabVIEW is a tool, and if your company is already heavily using this toolset, then you may be doing more harm than good by creating a separate parallel toolset to work with. You would then need to maintain multiple environments and expertise in two toolsets.

- It’s well-supported within the test & measurement community – from toolkits, to instrument drivers, to consultants that can step in if your main LabVIEW guy unexpectedly decides to leave your company.

- The development environment is user friendly:

  - National Instruments works hard to make the LabVIEW development environment consistent across hardware platforms, from PCs running Windows to embedded controllers running a real-time Linux OS with connections to FPGAs for tightly synchronized I/O. Most differences are included to take advantage of the resources of the specific platform so that the learning curve between platforms is about as small as it can get.

  - The data flow paradigm inherent in LabVIEW makes coding parallel operations trivial.

  - Each VI, which would be called a function in most other languages, comes with a user interface and a code block. Since you create a user interface for each function, debugging is much more visual than using user-defined breakpoints and probes.

  - LabVIEW brings together all the code, hardware, and build definitions into one location under the Project Window.

Another closely related question that you might want an answer to is “What is LabVIEW used for?”. This article explains the main applications that LabVIEW is used for, with case studies, how LabVIEW interacts with the real world, and what hardware LabVIEW runs on. If you need LabVIEW help and want to know what your options are, check out this article on LabVIEW Help – What are my options?.

What is LabVIEW used for?

LabVIEW is used for 4 main purposes:

- Automated Manufacturing test of a component/sub-system/system.

- Automated Product design validation of a component/sub-system/system.

- Control and/or monitoring of a machine/piece of industrial equipment/process.

- Condition monitoring of a machine/piece of industrial equipment.

(If you need LabVIEW help and want to know what your options are, check out LabVIEW Help – What are my options?. If you’re looking for why you might consider using LabVIEW, see our article Why use LabVIEW. If you’re just looking for some basics about what LabVIEW is, see What is LabVIEW?)

There are likely some additional corner cases out there, but this covers the vast majority of applications we see at Viewpoint. Historically, LabVIEW has been widely adopted in the automated test realm, essentially becoming the de facto standard in that application space, whereas more recently it’s been gaining traction within the realm of industrial embedded monitoring and control.

LabVIEW is a software development environment created by National Instruments. Originally it was focused on taking measurements from various lab instruments, but it’s expanded drastically from its inception. Strictly speaking, LabVIEW is not a coding language, it’s a development environment. The language is actually called “G”, but most people refer to LabVIEW as if it’s a language (i.e., most people would say “it’s coded in LabVIEW”).

LabVIEW is graphically-based, meaning you drag around various building blocks and connect them in a data flow architecture. It’s similar to drawing a block diagram, except you’re drawing your code, as opposed to text-based languages like C# & VHDL where you type out in text what you want the software to do.

What basic functions can LabVIEW perform?

LabVIEW can be used to perform a huge number of mathematical and logic functions, including, but certainly not limited to: basic arithmetic, if/then/elseif conditional statements, case statements, FFTs, filtering, PID control loops, etc. There are huge libraries of functions to pull from. You can also interface to code developed in other languages through, for example, DLLs, .NET assemblies, and run-time interpreters (e.g., MATLAB).
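For readers who have not met one of these building blocks before, here is what a basic PID control loop computes, written as a minimal Python sketch. It is a textbook discrete form with a fixed time step and no output limiting or anti-windup; the class and parameter names are illustrative, and this is not the implementation of LabVIEW’s own PID functions.

```python
from typing import Optional


class PID:
    """Textbook discrete PID controller with a fixed time step."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.dt = dt
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float) -> float:
        """Return the controller output for one loop iteration."""
        error = setpoint - measurement
        self._integral += error * self.dt
        if self._prev_error is None:
            derivative = 0.0  # no previous sample on the first call
        else:
            derivative = (error - self._prev_error) / self.dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative
```

Each call to `update` corresponds to one pass through the control loop, which is why loop timing and jitter matter so much for control applications.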

Another somewhat unique capability that LabVIEW offers is real-time compilation and the ability to execute function blocks without requiring development of a test case. Each LabVIEW function is designed with a user interface so you can interact with your code immediately after you write it.

LabVIEW use case – Automated Manufacturing Test

Manufacturing test systems are used to verify your product is within spec before it leaves the plant. The main drivers for manufacturing test are usually (1) test consistency, (2) error reduction, (3) throughput improvements, and (4) increased reliability/uptime.

Here are some good examples of manufacturing test systems:

Here are some good resources if you’re interested in more detail on manufacturing test systems:

LabVIEW use case – Automated Product Validation

Product validation systems are used during the design process to validate that the design works as intended, before production begins. The main driver for automating product validation is that the number of dimensions that need to be swept across (e.g. temperature, power supply voltages, pressure) can be large and take a lot of time (sometimes repeating over many cycles) to both collect and analyze the data.
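To see how quickly those dimensions multiply, the Python sketch below enumerates every combination of a few swept parameters. The parameter names and values are invented for illustration.

```python
import itertools
import math
from typing import Dict, Iterator, Sequence


def sweep_size(dimensions: Dict[str, Sequence[float]]) -> int:
    """Total number of test points in a full sweep."""
    return math.prod(len(values) for values in dimensions.values())


def sweep_points(dimensions: Dict[str, Sequence[float]]) -> Iterator[Dict[str, float]]:
    """Yield one dict of settings per combination of swept values."""
    names = list(dimensions)
    for combo in itertools.product(*(dimensions[n] for n in names)):
        yield dict(zip(names, combo))


# Hypothetical validation sweep:
plan = {
    "temperature_c": [-40, 25, 85],
    "supply_v": [4.5, 5.0, 5.5],
    "pressure_kpa": [90, 101],
}
```

Three small lists already produce 18 test points per cycle; add a few more values, dimensions, or repeat cycles and manual testing stops being practical.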

Here are some good examples of product validation systems:

LabVIEW use case – control and/or monitoring of industrial equipment & processes

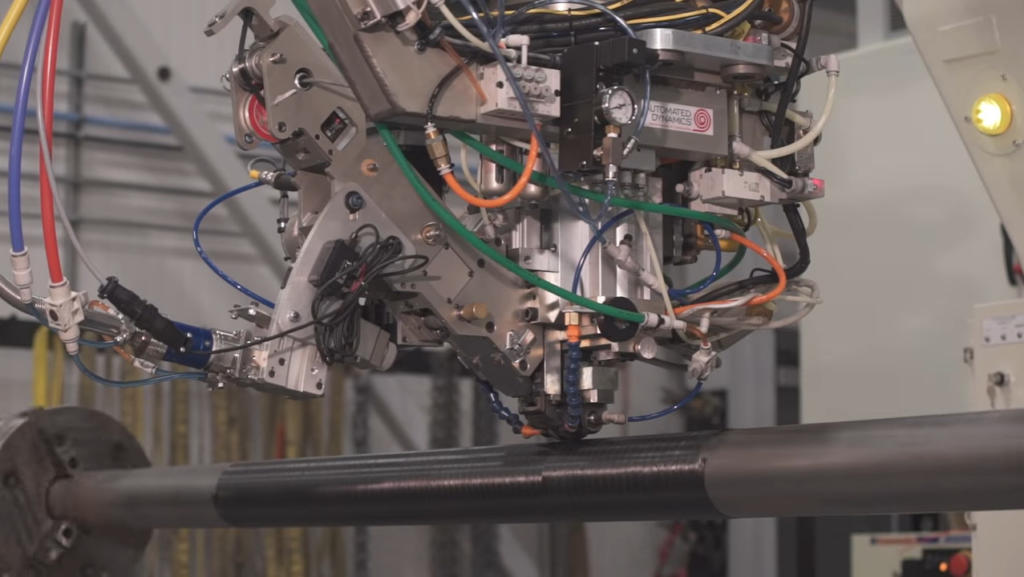

The main drivers for using LabVIEW (with NI hardware) for industrial embedded applications are: (1) rapid prototyping and development using off-the-shelf hardware, (2) tight tolerance timing, or (3) acquisition of high-speed signals.

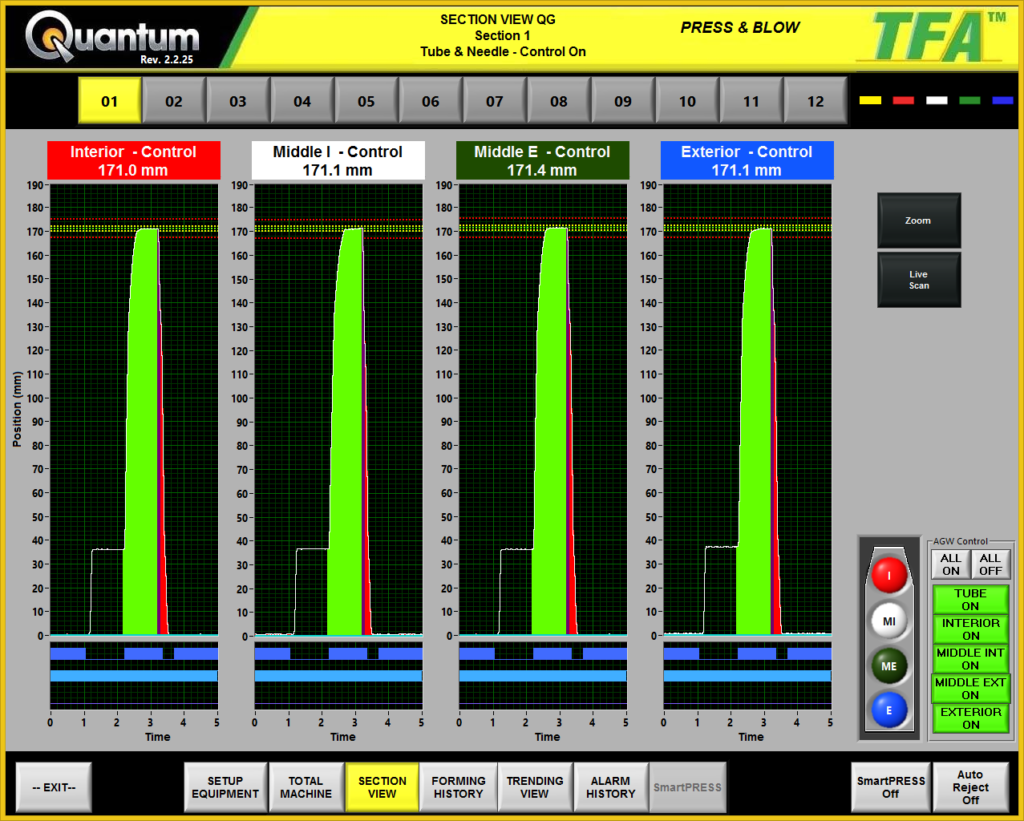

Here are some good examples of industrial embedded systems:

LabVIEW use case – condition monitoring

The main drivers for condition monitoring are generally either (1) improving machine up-time/reliability or (2) reducing maintenance costs.

Some examples of condition monitoring applications include:

How does LabVIEW interact with the real world?

There are 4 ways that software developed with LabVIEW interacts with the real world, all requiring hardware with an appropriate processor on board, either desktop PC-based or SoC (System-on-Chip) based:

- A GUI – either with a standard monitor or touch panel.

- Interfacing with lab equipment/instruments (e.g. through GPIB, Ethernet, USB, PCI, RS-422) – for example power supplies, power meters, multi-meters, spectrum analyzers, oscilloscopes, switch matrices, and signal generators.

- Measuring a signal with NI hardware (analog or digital) – for example temperature, pressure, vibration, current, load, voltage, flow, light, acoustics, force, location/orientation, vision, humidity/moisture, RF emissions, and magnetic field.

- Controlling a signal with NI hardware (analog or digital) – for example motor control, actuator control, or mass-flow controllers.
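When talking to lab instruments, a common first step is to ask the instrument to identify itself with the IEEE 488.2 `*IDN?` query, which returns four comma-separated fields. The Python sketch below parses such a reply; the example reply string is made up, and the actual bus communication (GPIB, Ethernet, USB, serial) is left out.

```python
from typing import NamedTuple


class InstrumentId(NamedTuple):
    manufacturer: str
    model: str
    serial: str
    firmware: str


def parse_idn(response: str) -> InstrumentId:
    """Split a *IDN? reply into manufacturer, model, serial, and firmware."""
    fields = [field.strip() for field in response.strip().split(",")]
    if len(fields) != 4:
        raise ValueError(f"expected 4 comma-separated fields, got {len(fields)}")
    return InstrumentId(*fields)


# Hypothetical reply from a power supply:
ident = parse_idn("ACME Instruments,PSU-1000,SN12345,1.02\n")
```

Checking the model and firmware fields at startup is an easy way to catch a swapped instrument or an untested firmware version before a test run begins.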

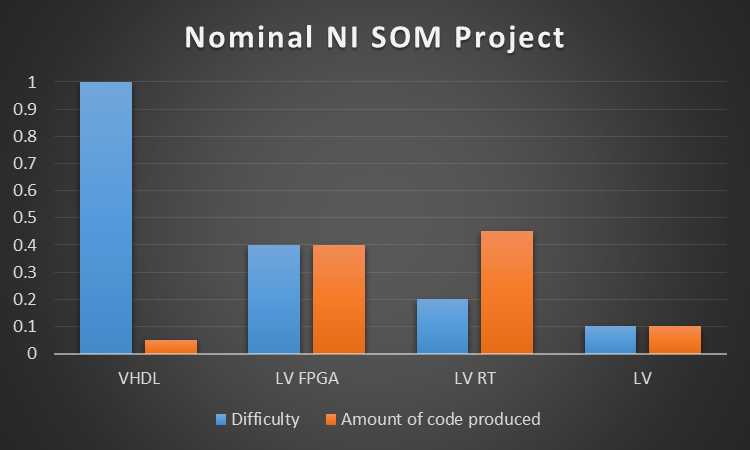

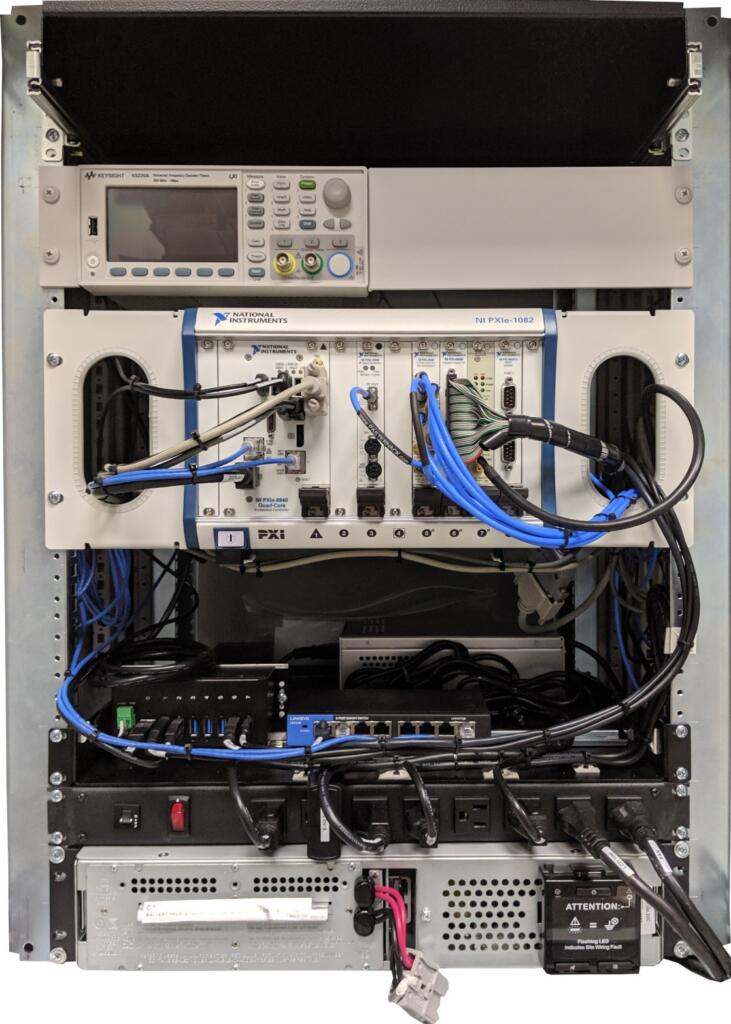

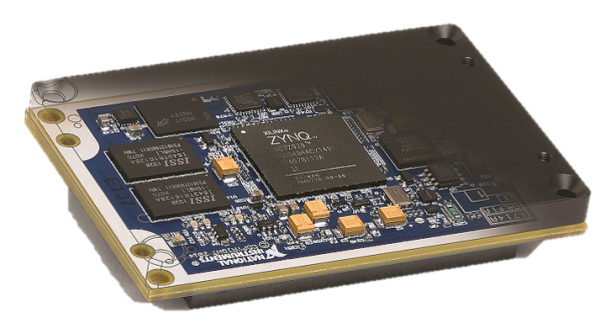

What hardware does LabVIEW run on?

LabVIEW can run on any of these platforms:

- A Windows-based PC

- A Windows-based PXI

- An NI CompactRIO

- An NI Single-Board RIO (including the NI SOM)

The specs of your application will drive your choice of hardware platform. Of course, you’ll want to be mindful of version compatibility as well.

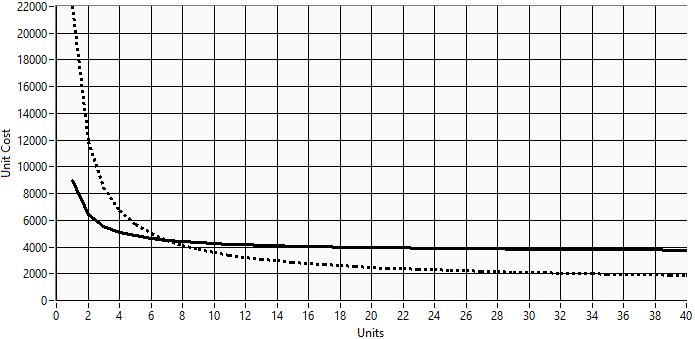

For embedded applications, you’ll generally want to default to using a cRIO (we love the cRIO and use it a LOT) and let your project requirements convince you that a different platform (e.g. an sbRIO or SOM) is warranted. There are more details than provided here, but the decision process will usually be based on 3 main criteria (feel free to reach out here if you want to discuss those details):

- Size / envelope – if your application requires a small envelope, the cRIO form factor may just be too big and you’ll be forced to go the sbRIO route.

- Production volumes – at some quantity, it’ll likely make more sense from a financial standpoint to use the sbRIO.

- I/O availability – depending on how much of what you need from an I/O (including comm. interface) standpoint is available either as a module or part of the base unit, the custom board non-recurring engineering design costs may sway you one way or another.
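The production volume criterion above comes down to simple break-even arithmetic: the one-time engineering cost of a custom board versus the per-unit savings. The Python sketch below uses entirely hypothetical dollar figures; plug in your own quotes.

```python
import math
from typing import Optional


def break_even_units(
    custom_nre_cost: float,
    crio_unit_cost: float,
    sbrio_unit_cost: float,
) -> Optional[int]:
    """Units at which per-unit savings repay the custom board NRE.

    Returns None if the sbRIO-based unit is not cheaper per unit.
    """
    savings_per_unit = crio_unit_cost - sbrio_unit_cost
    if savings_per_unit <= 0:
        return None
    return math.ceil(custom_nre_cost / savings_per_unit)


# Hypothetical: $60,000 NRE, $5,000 per cRIO system, $2,000 per sbRIO-based unit.
units = break_even_units(60_000, 5_000, 2_000)
```

With these made-up numbers the custom route pays off at 20 units; below that, the off-the-shelf cRIO is the cheaper choice even though each unit costs more.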

For test system applications, check out our guide Which NI Platform is Right for Your Test Needs? cRIO, PXI, cDAQ, sbRIO?.

Next Steps

Other LabVIEW questions? Check out our LabVIEW FAQ page.

If you work for a US-based manufacturer or R&D lab, go here for next steps:

Use Of Embedded Systems in Industrial Automation

Use Cases & Case Studies

Use cases for embedded systems in industrial automation can be divided into two main classes: machine control and machine monitoring.

To make sure we’re on the same page, we’re NOT talking about test system automation. If you’re interested in that topic, please see here.

Embedded machine/equipment control – for this use case, the embedded system is controlling some aspect of the industrial machine/equipment. It might be controlling the tight tolerance timing of a particular manufacturing process, it might dynamically adapt production of the part to improve product quality, or it might control the operation of a piece of industrial equipment out in the field. Some examples of machine control applications include:

Machine/equipment monitoring – this could include generalized monitoring of a machine or it could be more focused specifically on condition monitoring, which generally has the objective of improving machine up-time/reliability and/or reducing maintenance costs and production losses. Some examples of machine/equipment monitoring applications include:

If you’re looking for help with using embedded systems for your industrial application, there are two things you’ll want to do to get started: (1) develop a good set of requirements (see here for an industrial embedded requirements template) and (2) find a vendor capable of helping you (check out this Outsourcing Industrial Embedded System Development Guide). If you want to chat about your application with us, you can reach out here.

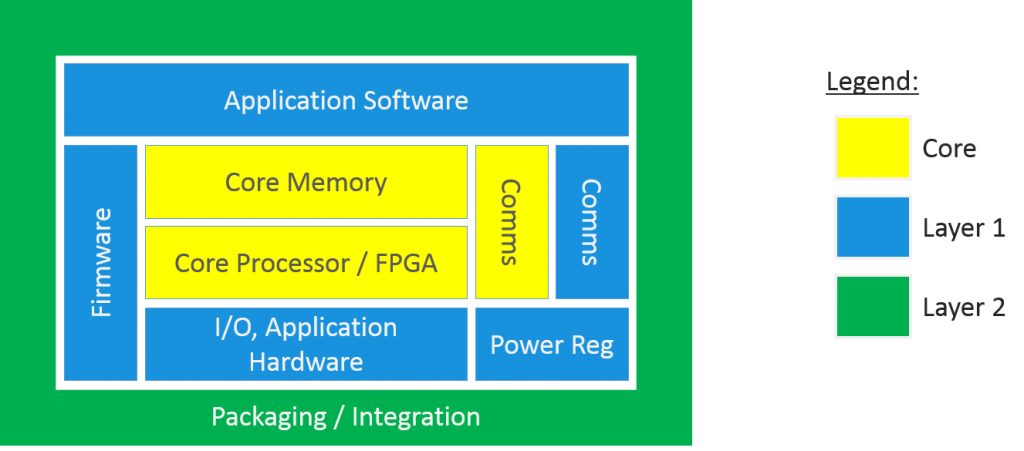

Improving Time to Market for Embedded Systems

The Big Hurdles

When choosing to develop a new product or platform based on an embedded system, companies commit to spending time, money, and resources. There’s an enormous benefit to finishing this development as quickly as possible so that the product or platform can be released to the customers or users as quickly as possible. Fortunately, by using certain tools and methods, companies can in fact shorten the development cycle, and improve their time to market.

I want to make a small distinction between a product and platform. The distinction surrounds the type of user. Embedded systems developed for external use by general customers are labeled as a product. When developed for internal use by specific end-users, it is labeled as a platform.

Don’t minimize the prevalence of these internally-used platforms. I’m including controllers that are used inside a product sold to a customer (e.g., a controller that operates a forklift would qualify, or a condition monitoring device for a gas compressor would qualify).

The key point to be made about these two classes of users is that both need tech support, repair services, upgrades, and so on. So, I’m going to refer to a platform as a product too.

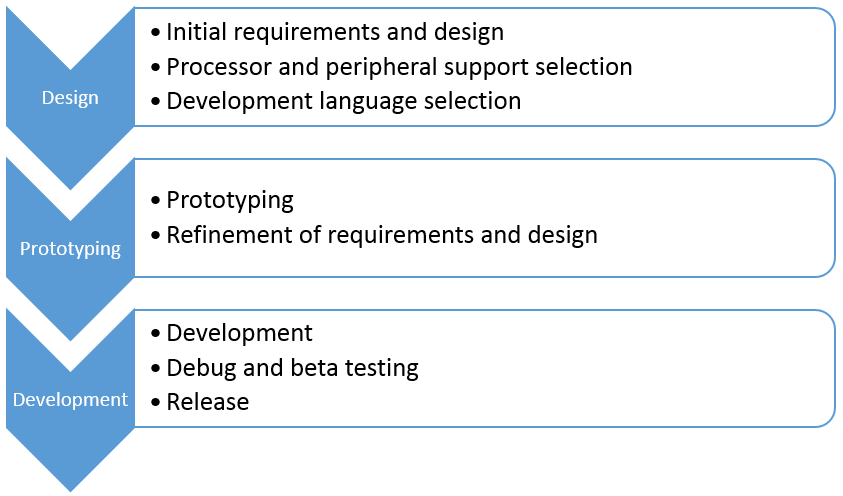

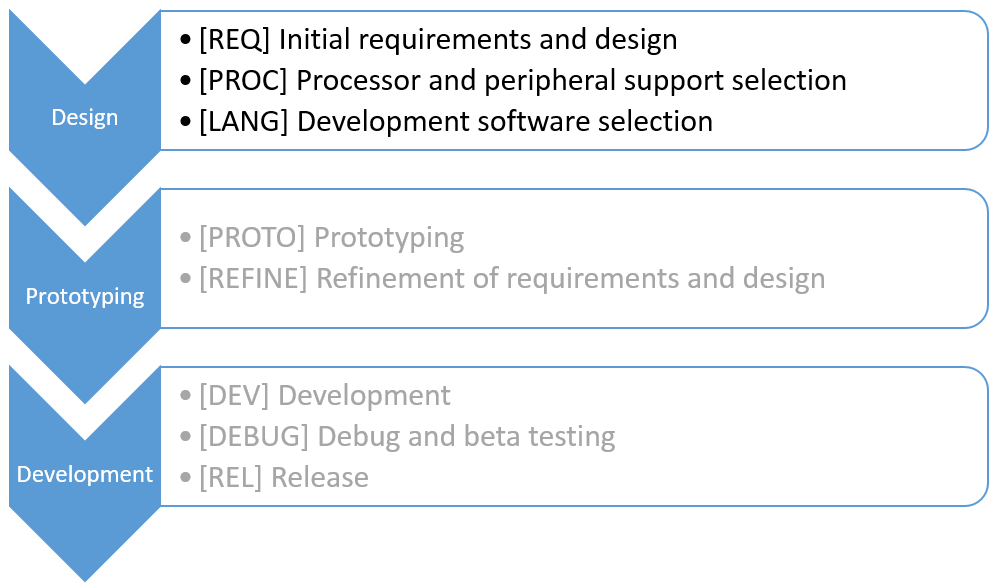

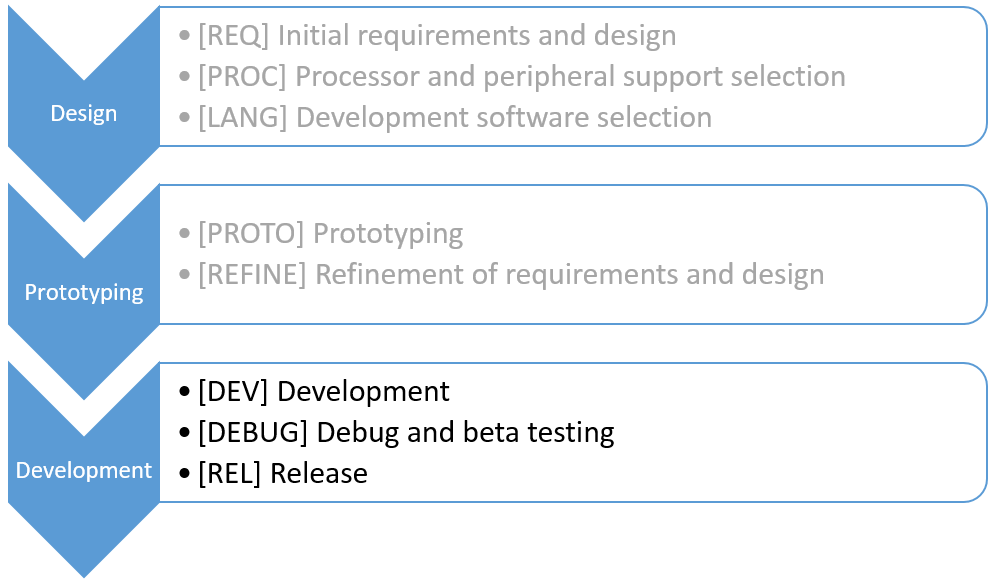

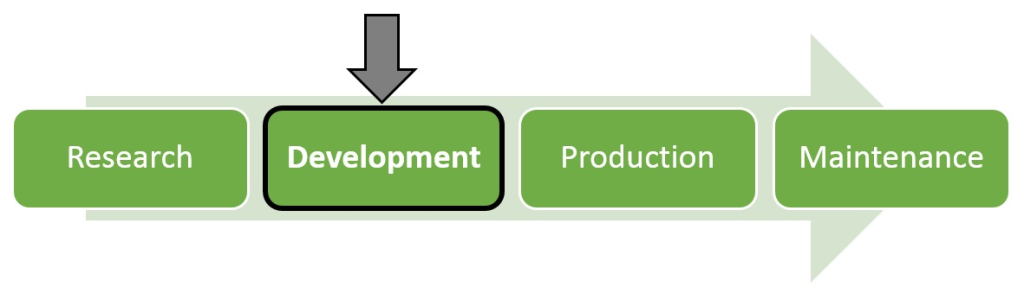

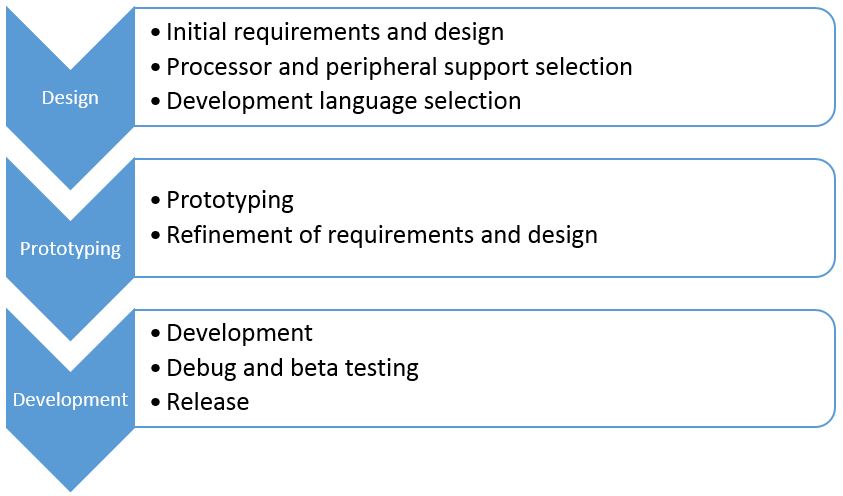

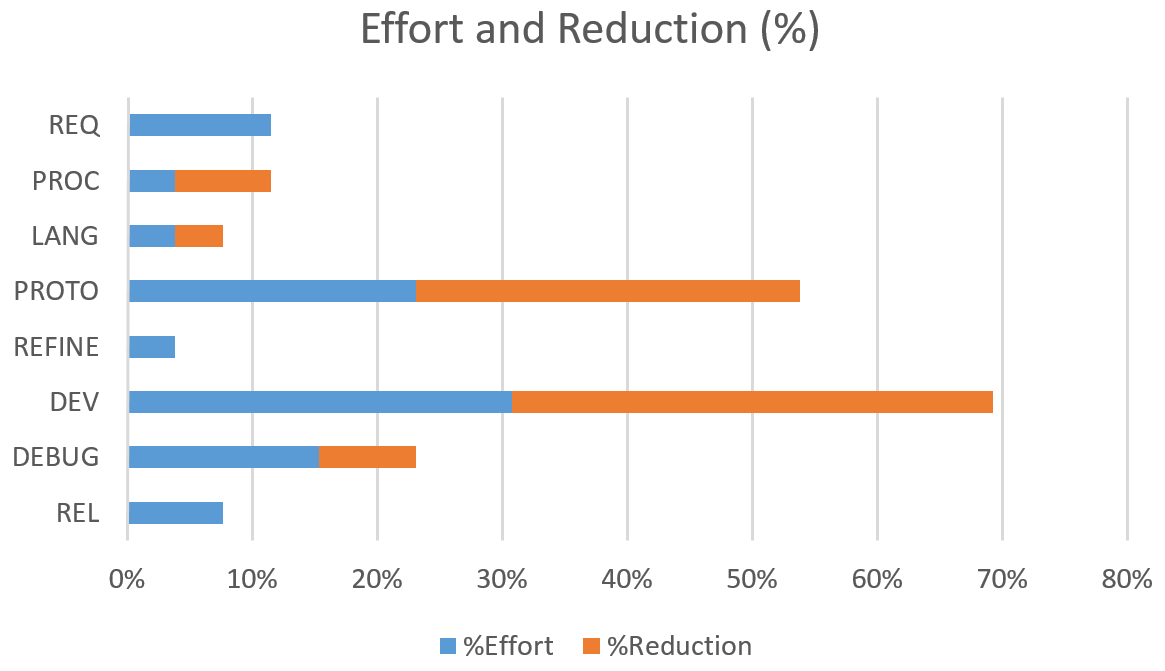

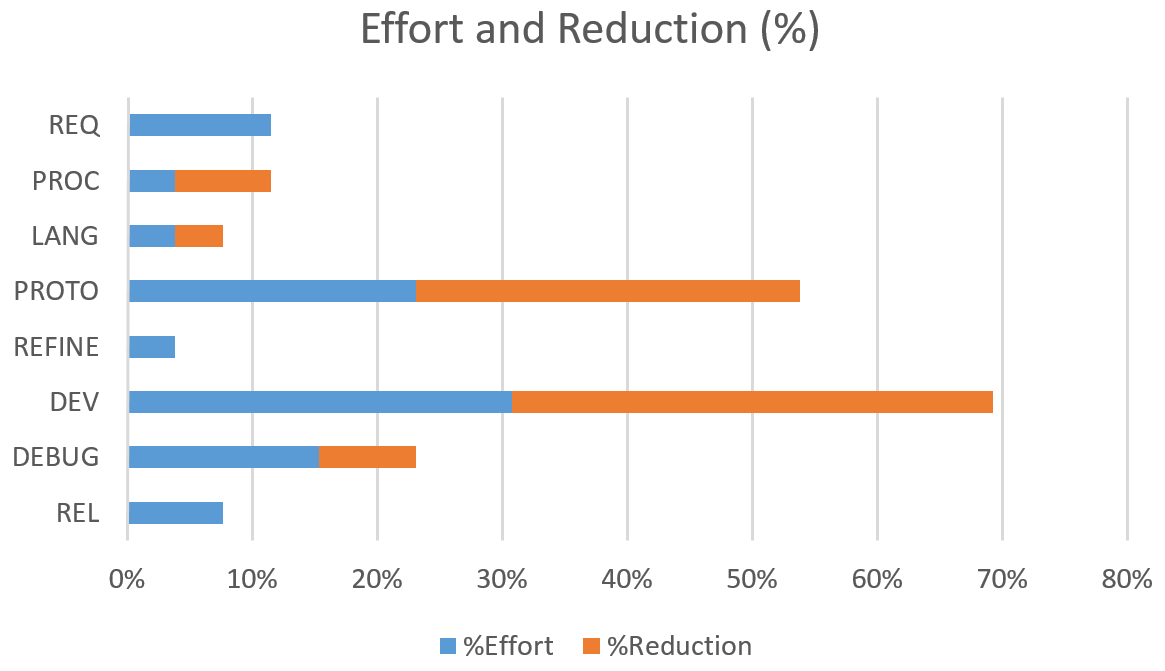

Here is a breakdown of the steps needed to bring a product to market:

I want to describe each of these items a bit in this post and will do a few “deep dives” in future posts.

As you might imagine, some of these groups associated with these steps are more amenable than others to doing things that improve time to market (TTM). I want to touch on these things quickly and leave some details to those future posts.

The design activities are critical to choosing a platform that will achieve the requirements as quickly as is reasonable. The outcome of this group of steps has a big impact on the TTM.

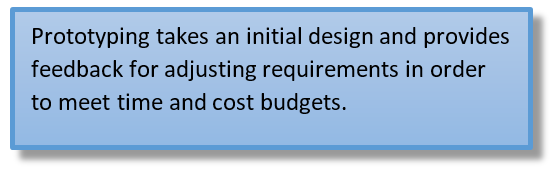

The prototype activities are also critical to achieving the requirements, but the way they impact the project is in identifying any changes to requirements and design based on the outcome of some initial proof-of-concept-level development and testing. This group is all about failing fast, quickly identifying weaknesses in the component and design choices.

The last group of development activities is less critical to achieving the requirements, and is instead mainly focused on completing the development. There are some tools and techniques that can help speed TTM, but I think these tools have less effect (or maybe a better term is “smaller levers”) than the effect that the other groups have.

So, with that setup, look forward to future posts on design, prototyping, and development when I give you some ideas to speed TTM for embedded systems.

Want more information on developing embedded systems? Read our white paper “Top Five Embedded System Design Fails.”

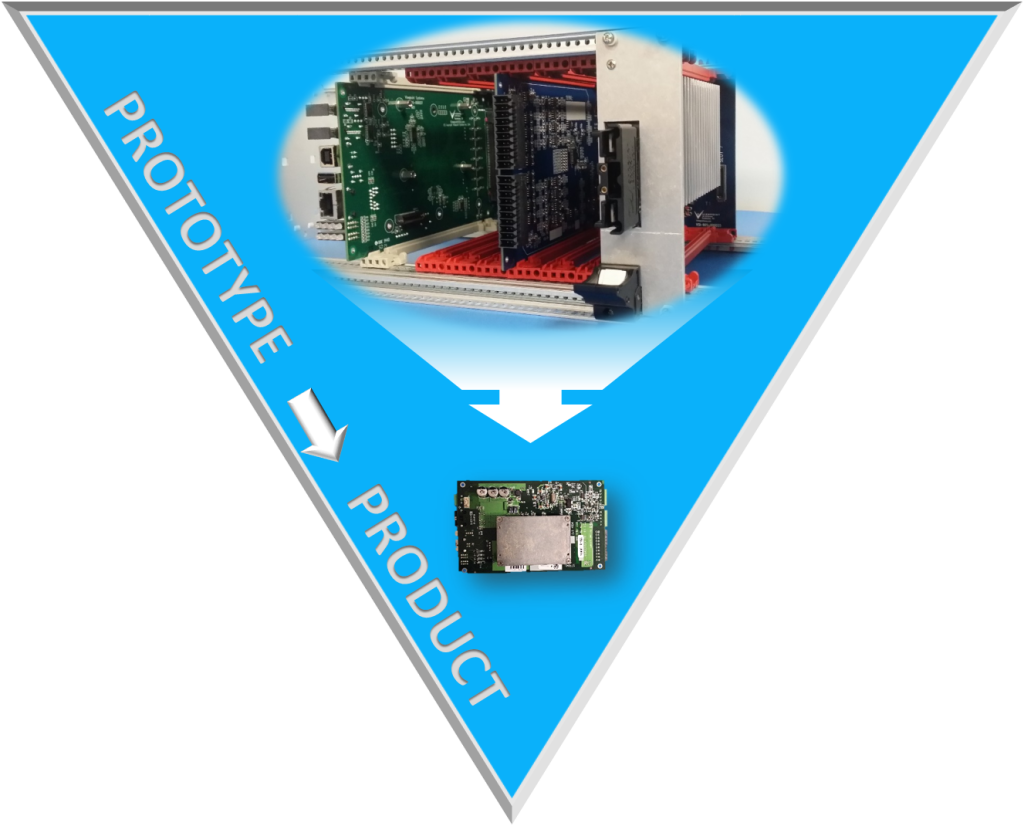

Tips for Reducing Embedded Prototyping Costs

2 Tips for Reducing Embedded Prototyping Costs

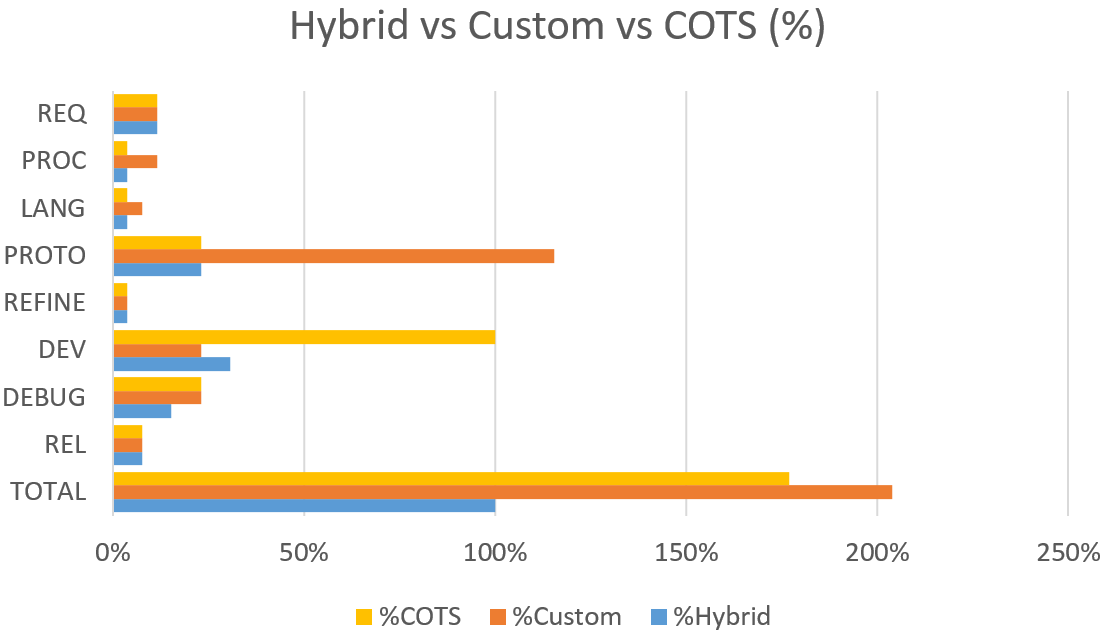

In just about every industry there is a drive to reduce cost when bringing new products to market. With regard to the world of embedded design, there are a few things that have been proven to consistently allow for teams, both big and small, to reduce the cost and time associated with new widgets.

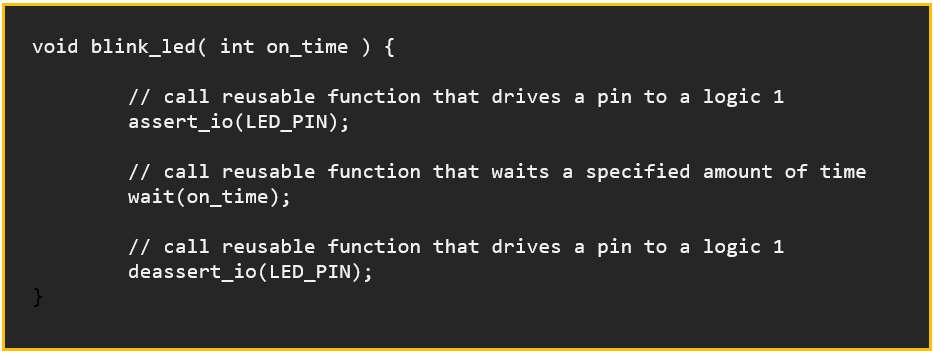

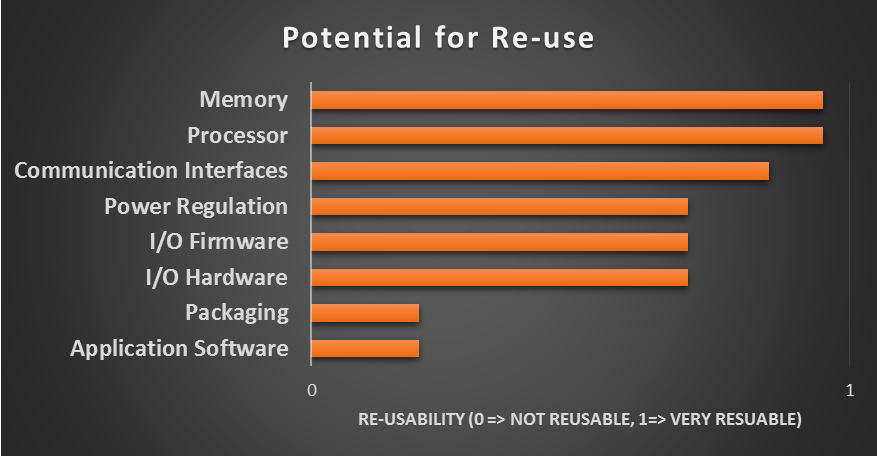

Tip 1 for Reducing Embedded Prototyping Costs – Reuse. Reuse. Reuse.

In the world of software (embedded or not), coders do their best to avoid copying and pasting code. We do this by creating reusable code blocks (commonly referred to as functions, though they go by a variety of names) that various parts of the system can call to perform tasks. Examples would include converting between data types, performing complicated file I/O, or manipulating data structures.

This reuse in code provides a few different things that make the coders’ lives easier:

- If the functionality of the code snippet has to change, you only have to change it in one spot.

- You don’t risk “copy pasting” errors by having very similar code all over with just slight differences.

- Testing complexity is significantly reduced.

Reusing code is usually a pretty easily understood concept, and thus is implemented in most computing projects.

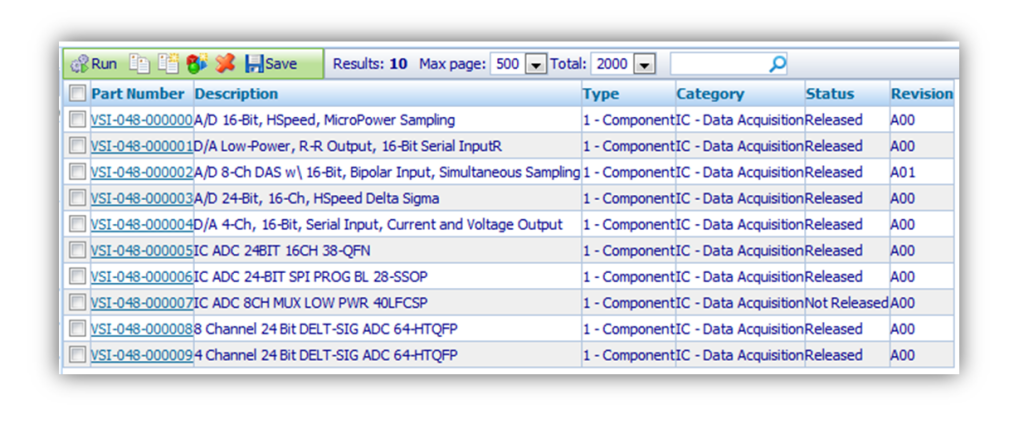

The other aspect of reusability to consider is the hardware perspective. This is commonly accomplished with libraries of components (Integrated Circuits, passives, interconnects, stencil artwork, etc.). Most embedded engineering teams have amassed quite the library of hundreds, thousands, or even tens of thousands of components that they have used over the years in their projects.

Organizing that many components can really be a challenge. There are, luckily, a number of software providers that assist with managing this sea of schematic, layout, and mechanical drawings. Some even allow for parametric search and meta-data assignment for internal use. The *really* good ones help with BOM export/management so buyers aren’t flying blind when they go to actually purchase the components that designers put on the boards.

Wait, aren’t we talking about saving money? Those tools sound expensive …

Tip 2 for Reducing Embedded Prototyping Costs – Yea. Tools cost money. So does not buying them.

I think my biggest trick for saving money in embedded designs is not cutting corners with tools for the design team. Too many times I’ve heard things like:

“ … we didn’t buy the debugger because it was $1,100, and we didn’t have that in the budget”

or

“ … we rented the cheapest scope we could for 6 months, because the engineers insisted we get one.”

Meanwhile they’re 6 weeks past their final milestone because there was a null pointer reference deep in their SPI Flash library, or they couldn’t see the overshoot on their clock because the scope only had 500 MHz of bandwidth. Sure, Michelangelo probably would have been able to paint the Sistine Chapel with a toothbrush, but for the rest of the artists out there, spending the money on high-quality brushes helps them produce a higher-quality output product. Engineers and their tools are no different.
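The scope example is easy to put numbers on. A common rule of thumb for scopes with a roughly Gaussian response is that the scope’s own rise time is about 0.35 divided by its bandwidth, and that the displayed rise time is the root-sum-square of the signal’s and the scope’s. The Python sketch below applies that rule; the 0.5 ns clock edge is an assumed figure for illustration.

```python
import math


def scope_rise_time_s(bandwidth_hz: float) -> float:
    """Approximate 10-90% rise time of the scope itself (0.35 / BW rule)."""
    return 0.35 / bandwidth_hz


def measured_rise_time_s(signal_rise_s: float, bandwidth_hz: float) -> float:
    """Rise time the scope will display for a given true signal rise time."""
    return math.sqrt(signal_rise_s ** 2 + scope_rise_time_s(bandwidth_hz) ** 2)


# A 500 MHz scope looking at an assumed 0.5 ns clock edge:
scope_tr = scope_rise_time_s(500e6)             # about 0.7 ns
displayed = measured_rise_time_s(0.5e-9, 500e6)  # about 0.86 ns
```

Here the scope is slower than the edge it is measuring, so fast features such as overshoot are smoothed away before they ever reach the screen.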

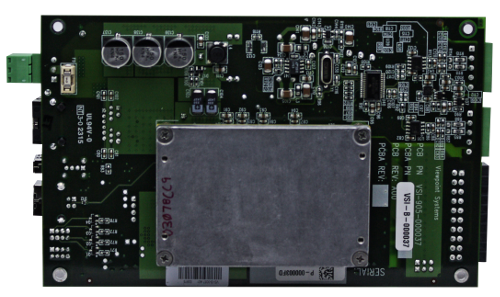

The next level of reuse is entire hardware designs, not just the single components within them. Although some hardware designs do not use software, with the increased complexity of ICs on the market, hardware designs are becoming more and more dependent on software to work correctly. This pairing of hardware and software in reusable, modular packages allows for massive reductions in cost and risk.

Pulling from a library of proven hardware designs is not uncommon when moving between versions of products, or producing tech refreshes for existing product lines. This, of course, requires the team to first create the product from scratch, and then build off of it. Sounds great, but building up that library to pull from can be hundreds of thousands to millions of dollars – even for a small library.

To address some of these challenges, we’ve developed an ecosystem for embedded development called VERDI, to help reduce engineering costs and development risk. Check it out and let us know what you think:

When Is an FPGA Worth it and When is it NOT – when developing an Industrial Embedded System – Part 2

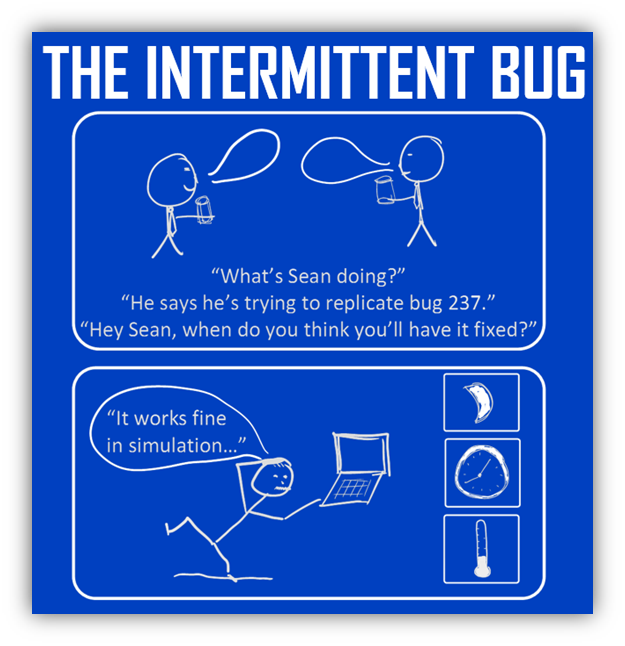

In part 1 of this article, we introduced some well-suited applications for FPGAs, and highlighted some strengths and weaknesses of FPGAs. Now we transition over to some cautionary elements of utilizing FPGAs.

Some Things to be Mindful Of

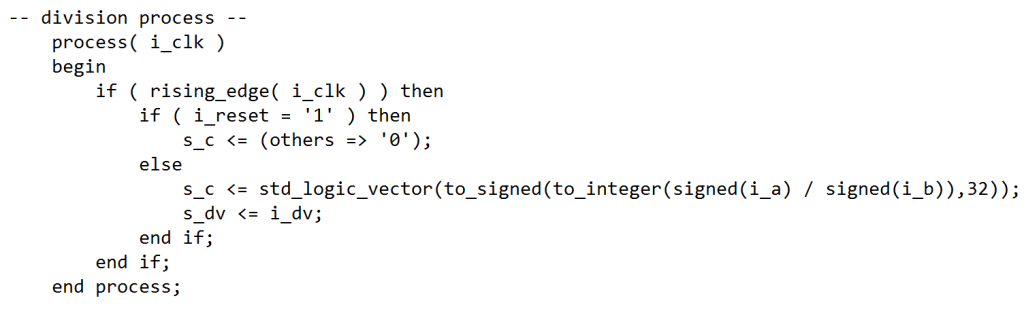

Traditional development environments that tend to utilize text-based languages such as VHDL and Verilog are NOT for the casual user. The languages and the tool chains have a steep learning curve and the tool chains can be expensive.

Debugging tools –

The disadvantage of a run-of-the-mill sequential processor is that only one operation is executing at any point in time. The advantage of a sequential processor is that only one operation is executing at any point in time. When it comes to debugging, this sequencing makes life easier than debugging an FPGA. Simulation environments for FPGAs are generally pretty solid, but you eventually have to move into hardware, where your visibility into what’s going on decreases significantly. You can view outputs of course, and you can create test points, but you have to be able to probe all of those points, so you’ll need a logic analyzer, which can get very pricey. You may be able to get away with embedding test resources into your device (e.g. Xilinx has the Integrated Logic Analyzer), but this will utilize FPGA logic and memory resources, and it is often challenging to see enough breadth or depth of what’s going on inside your FPGA. Generally, these tools are better for augmenting a true logic analyzer than for replacing one outright.

Cyber security –

A lot of FPGAs are embedding Ethernet cores and common processor cores, and some are even running an OS, so FPGA-based solutions (sometimes referred to as SoCs, or systems on a chip) look like another computer on the network. This opens them up to more traditional attack methods. Take the time to understand your risks and mitigate them. Obscurity is generally NOT a solid security approach (check out The Great Debate: Security by Obscurity). Here are two articles to get you thinking:

- How to build an FPGA-based I&C

- Microsemi Steps Up Its Cyber Security Leadership in FPGAs: SmartFusion2 SoC FPGAs and IGLOO2 FPGAs Enhanced with Physically Unclonable Function Technology

Safety –

If you’re considering an FPGA-based system for a safety-related function, you need to understand the risks that you’re incurring. Here are a few articles to get you thinking:

- Safe FPGA Design Practices for Instrumentation and Control in Nuclear Plants

- SRAM-Based FPGA Systems for Safety-Critical Applications: A Survey on Design Standards and Proposed Methodologies

- Xilinx Reduces Risk and Increases Efficiency for IEC61508 and ISO26262 Certified Safety Applications

Where You Might Head From Here:

Hopefully these thoughts have given you some things to chew on. If you decide it might make sense to proceed with an FPGA-based embedded system and don’t have the time or manpower to create your own solution, check out VERDI. If you’re interested in other industrial embedded system info, check out our resources page.

When is an FPGA Worth it and When is it NOT – when developing an Industrial Embedded System – Part 1

So you keep hearing about FPGAs being utilized in more and more applications, but aren’t sure whether it makes sense to switch to a new technology. Or maybe you’re just getting into the embedded world and want to figure out if an FPGA-based system makes sense for you or not.

One of the first questions you should be asking yourself on this topic is: relative to what? What are we comparing an FPGA-based solution to? Mostly we’re comparing to scenarios where general-purpose microprocessors or microcontrollers are being considered as the alternative technology.

Well-Suited Applications

Historically, FPGAs were VERY expensive (like up to thousands-of-dollars-per-chip, expensive), which limited the applications that utilized FPGAs to VERY expensive systems, such as military and aerospace systems. See Wikipedia – FPGA Applications for more use cases.

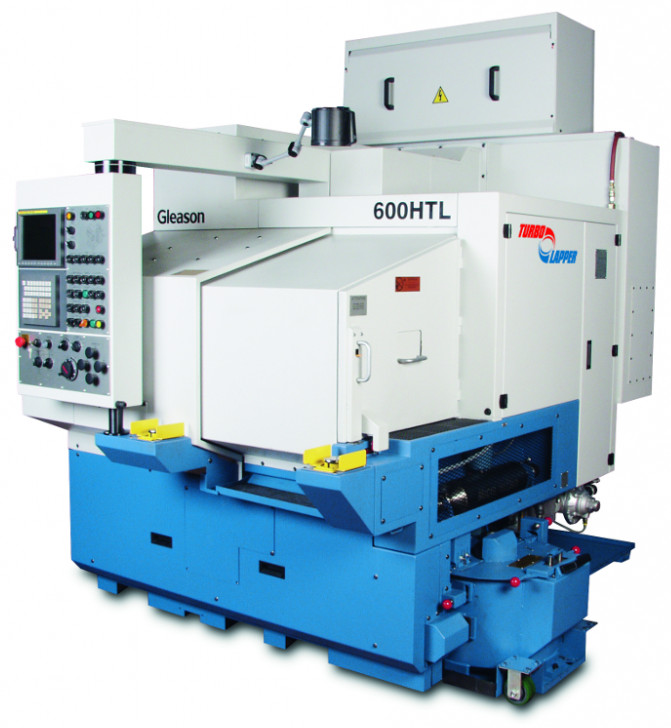

As FPGA prices continue to drop, I speculate we’ll see more and more advanced industrial equipment and machines (with particular needs) taking advantage of FPGA-based systems. Here are a few that we’ve seen:

- Industrial machine – Gear lapping

- Electrical Energy – VAR compensator

- Structural health monitoring – Railroad viaduct

- Electrical Energy – Remote monitoring

- Electrical Energy – Generator Condition Monitoring

- Industrial Equipment – Compressor Condition Monitoring

FPGA Strengths/Best Suited:

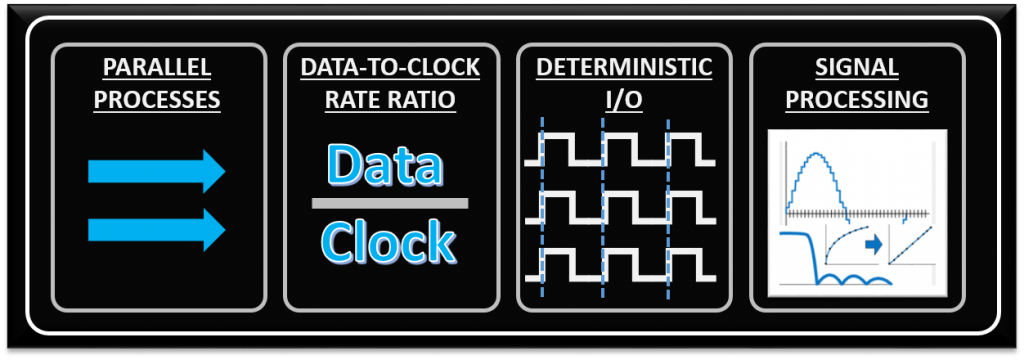

Much of what will make it worthwhile to utilize an FPGA comes down to the low-level functions being performed within the device. There are four processing/algorithm attributes defined below that FPGAs are generally well-suited for. While just one of these needs may drive you toward an FPGA, the more of these your application has, the more an FPGA-based solution will appeal.

- Parallel processes – if you need to process several input channels of information (e.g. many simultaneous A/D channels) or control several channels at once (e.g. several PID loops).

- High data-to-clock-rate-ratio – if you’ve got lots of calculations that need to be executed over and over and over again, essentially continuously. The advantage is that you’re not tying up a centralized processor. Each function can operate on its own.

- Large quantities of deterministic I/O – the amount of determinism that you can achieve with an FPGA will usually far surpass that of a typical sequential processor. If there are too many operations within your required loop rate on a sequential processor, you may not even have enough time to close the loop to update all of the I/O within the allotted time.

- Signal processing – includes algorithms such as digital filtering, demodulation, detection algorithms, frequency domain processing, image processing, or control algorithms.
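To make the deterministic I/O point a bit more concrete, here is a rough budget check sketched in Python. The function name `loop_budget` and all of the numbers (loop rate, channel count, per-channel service time, overhead) are made up for illustration; plug in measurements from your own processor.

```python
def loop_budget(loop_rate_hz, channels, us_per_channel, overhead_us=0.0):
    """Rough timing budget for a sequential processor that services
    its channels one after another inside a fixed-rate loop.

    Returns (period_us, required_us, fits).
    """
    period_us = 1_000_000 / loop_rate_hz                    # time available per loop iteration
    required_us = channels * us_per_channel + overhead_us   # work done serially per iteration
    return period_us, required_us, required_us <= period_us


# Hypothetical numbers: 10 kHz loop, 32 channels, 4 us per channel, 10 us of overhead
period_us, required_us, fits = loop_budget(10_000, 32, 4.0, overhead_us=10.0)
print(f"available: {period_us} us, required: {required_us} us, fits: {fits}")
```

With these assumed numbers the sequential loop needs more time than the loop period provides, so it can’t update all of the I/O in time. Because an FPGA can generally service channels in parallel, its required time doesn’t scale with channel count in the same way.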

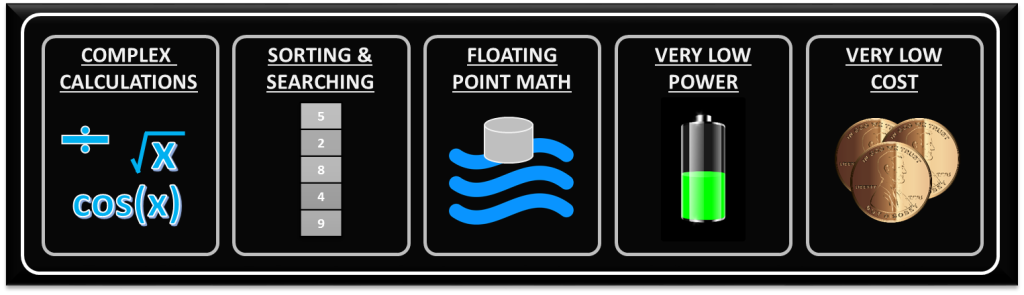

Weaknesses/Non-Optimal:

With any significant benefit, there’s often a corresponding cost. In the case of FPGAs, the following are generally the main disadvantages of FPGA-based solutions.

- Complex calculations infrequently – If the majority of your algorithms only need to make a computation less than 1% of the time, you’ve generally still allocated those logic resources for a particular function (there are exceptions to this), so they’re still sitting there on your FPGA, not doing anything useful for a significant amount of time.

- Sorting/searching – this really falls into the category of a sequential process. There are algorithms that attempt to reduce the number of computations involved, but in general, this is a sequential process that doesn’t easily lend itself to efficient use of parallel logical resources. Check out the sorting section here and check out this article here for some more info.

- Floating point arithmetic – historically, the basic arithmetic elements within an FPGA have been fixed-point binary elements at their core. In some cases, floating point math can be achieved (see Xilinx FP Operator and Altera FP White Paper), but it will chew up a lot of logical resources. Be mindful of single-precision vs double-precision, as well as deviations from standards. However, this FPGA weakness appears to be starting to fade, as hardened floating-point DSP blocks are starting to be embedded within some FPGAs (see Altera Arria 10 Hard Floating Point DSP Block).

- Very low power – Some FPGAs have low power modes (hibernate and/or suspend) to help reduce current consumption, and some may require external mode control ICs to get the most out of this. Check out an example low power mode FPGA here. There are both static and dynamic aspects to power consumption. Check out these power estimation spreadsheets to start to get a sense of power utilization under various conditions. However, if low power is critical, you can generally do better power-wise with low-power architected microprocessors or microcontrollers.

- Very low cost – while FPGA costs have come down drastically over the last decade or so, they are still generally more expensive than sequential processors.
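As a rough illustration of the fixed-point arithmetic mentioned in the floating point item above, here is a minimal Python sketch of Q15 math (signed 16-bit values with 15 fractional bits), a common fixed-point format. The helper names are hypothetical, and this only models the arithmetic; it is not how any particular FPGA vendor’s tools implement it.

```python
Q = 15              # number of fractional bits in Q15
SCALE = 1 << Q      # 32768


def saturate(v):
    """Clamp an integer to the signed 16-bit range used by Q15."""
    return max(-SCALE, min(SCALE - 1, v))


def to_q15(x):
    """Quantize a float in roughly [-1, 1) to a Q15 integer, saturating at the limits."""
    return saturate(int(round(x * SCALE)))


def from_q15(v):
    """Convert a Q15 integer back to a float."""
    return v / SCALE


def q15_mul(a, b):
    """Multiply two Q15 integers.

    The raw product has 30 fractional bits, so add half an LSB for
    rounding and shift right by 15 to get back to Q15.
    """
    product = a * b
    return saturate((product + (1 << (Q - 1))) >> Q)


a = to_q15(0.5)
b = to_q15(0.25)
print(from_q15(q15_mul(a, b)))
```

The multiply is just an integer multiply, an add, and a shift, which maps naturally onto fixed-point hardware. The trade-off is that range and precision are fixed up front, so values at or beyond the limits saturate and small values lose resolution.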

Some Things to be Mindful Of

Stay tuned for part 2 of this article, where I’ll throw out the caution flag and highlight some areas to be mindful of, revolving around debugging tools, cyber security, and safety.

Where You Might Head From Here:

Hopefully these thoughts have given you some things to chew on. If you decide it might make sense to proceed with an FPGA-based embedded system and don’t have the time or manpower to create your own solution, check out VERDI. If you’d like more useful info on industrial embedded systems, check out our resources page here. You can check out part 2 of this article here.

The Embedded Design Checklist – For New Designs

Beginning a new embedded design is both exciting and daunting. There are many aspects of starting a new design that are fun and engaging, but it’s important to step back and make sure you’ve made the right decisions and assumptions up-front, to reduce headaches later. Below is a simple checklist you can use when starting a new design.

Does everyone have a firm grasp of the scope of the project?

Most embedded projects seem simple when designing on the white board, but it’s often the case that large parts of the design aren’t yet known, or are not well communicated to the system architect. Make sure you work with your external customer(s) as well as your internal team to ensure all stakeholders have a firm grasp on the scope of the system. One of the worst phrases you can hear at demo time is “well, where is feature X?”.

Does everyone know their role?

It’s easy to just assign tasks to the team based on approximate time-loading, but this can be troublesome in a number of ways. Since each member of your team probably doesn’t have the exact same skill set, the team should work together to understand which tasks are the best fit for each member. It is, of course, important to continue to challenge yourself and team members. However, if the project isn’t going to stay on budget because a member needs to learn too many skills to complete a task, that doesn’t make sense. This touches on the next checklist item …

Does everyone have the training they need?

Not every new design is going to be “cookie cutter”. It’s very possible that a customer ( internal or external ) requires your new design to use a technology that no one on your team has experience in. This could be as simple as a transmission protocol, or as complicated as a brand new processor, DSP, or FPGA platform/architecture. It’s important to understand what training may be needed, build that cost into the project, and set expectations appropriately with all stakeholders associated with the project on how long it may take to bring everyone up to speed on the new technology.

Has someone done a ‘back of the napkin timeline’?

A big part of engineering is sanity-checking your decisions ( calculations and assumptions ) with estimation. You should always be able to look at a design or problem and get a rough order of magnitude of how large a problem it is ( this statement obviously falls apart for very large programs such as the space shuttle, but for the sake of this blog post let’s assume this is just for embedded devices and smaller ). Understanding what the goal timeline is and what a realistic timeline is ( hopefully those match up closely ) will help you continue to set expectations with stakeholders, and better work with your team to meet milestones. There will always be projects that end up behind schedule and become stressful. However, having an understanding of what a possible timeline is before starting the project can greatly reduce stress going in.

Do you have a block diagram?

Spend some time with a whiteboard and your team. Get everyone in the same room with a large, blank whiteboard and go to town. Start with the central processing IC ( processor, DSP, FPGA, SoC, etc. ), and move outward. Most embedded systems need memory ( both volatile and non-volatile ), power management, and communications ports. Additional components might include analog-to-digital and digital-to-analog converters. Get as much of the system down onto the whiteboard as you can, and then start taking pictures. It is also a good idea to have a dedicated note taker for each meeting, and have those meeting notes posted for all team members to see ( it may also be appropriate to send these notes and the block diagram to other stakeholders ).

Has the team settled on what Software Tools will be used?